A.I.: Natural Language Processing, and the Turing Test

I have mentioned in several posts my interest in A.I. since the mid-1980s. I was a teenager then, but I spent a lot of my free time programming. I had several thousand hours of programming experience before I ever got to college. The internet was not really a thing most people had access to yet, and I myself didn't get access until around 1990 and even then it was pretty pioneering.

So my early days of A.I. began as an accidental experiment by me in programming. I recall riding a school bus and someone basically entering a put down / cut down / ridiculing / name calling type war with me and I was out classed. I couldn't really hold my own.

Somewhere along the way I thought of a really simple program that I could type things in and it would take the things I said to it and combine them in unique ways that sounded like an insult.

It is a simple but effective algorithm when it comes to regurgitating things back at someone just by slapping together things I'd said in random different patterns.

While this wasn't really Natural Language Processing it was one of my early self motivated jaunts into the edges of that field.

It also did make me extremely good at coming up with random off the wall insults that left the recipient stunned. I also learned I didn't really need to use bad language to do this. In fact, many of the best insults just were disgusting combinations of rather ordinary things.

So as I often did. By writing the program I kind of also gave my own mind a new algorithm. I taught myself quadratic equation solving and such the same way.

I lived in a remote part of Colorado, and there were no computer clubs and cool people that knew a lot I could hang out with. In fact, sadly I suspect I was about as cutting edge on the topics as anyone within 100 miles or more at the time.

I would read what I could get my hands on, watch PBS Nova and see some simulation and I'd go remake the simulation for myself. I was very self learned.

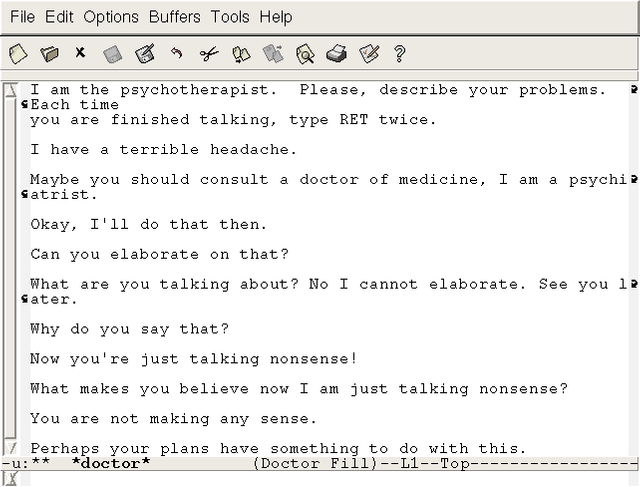

Eventually I'd hear about Eliza. The computer program that acted like a therapist.

"Tell me about your mother."

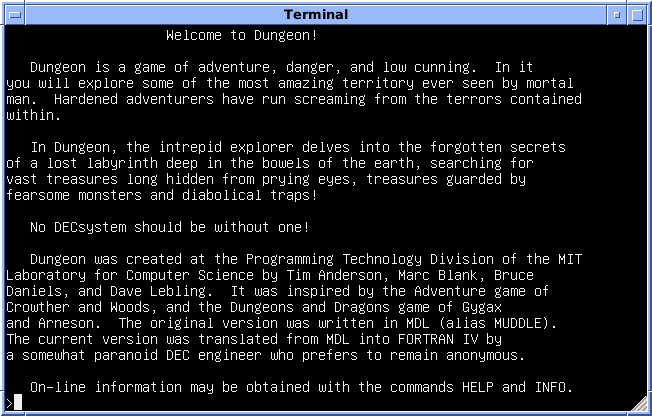

This was pretty fascinating and all I had was a description to work from, but I was also familiar with parsing and how text adventure games by Infocom such as Zork processed text commands.

I started building my own. The process of trying to teach a computer to process language I do think makes you begin to think about the human mind a lot differently.

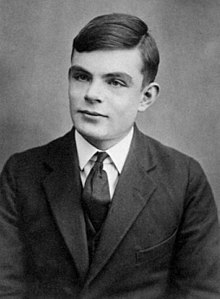

I had spent quite a bit of time at this before I learned about Alan Turing and the Turing Test. I actually didn't know many details about Alan at that early age. Though I was voracious when it came to his Turing Test.

The idea was that if you could have people communicate via text or some other method could you design a computer program that could consistently fool humans into believing they were speaking with a human?

At the basic level it is not that hard. Thus, why Saudi Arabia granted citizenship in their ignorance to a bot that can't actually think, it can just mimic, and not even that well. There are also people in Japan that date phone apps that are essentially something like this. That app is to them their significant other.

I actually wrote a lot of such programs. It had me spending a lot of time analyzing how people would type in sentences.

Example:

Who are you?

What are you?

Why did you...

Who do you think you are?

Now the first thing is that you can teach it to assume those are questions by the first word, and you can also decide perhaps some canned mad-lib like substitution matrices to respond to those. Yet you need to process the other words to truly respond. The more you can make the program truly understand these things the better.

Remember there was no internet back then so I couldn't just have my program run queries on a search engine. Whatever responses it gave I had to have given it a way to make them.

So you could have it keep track of little details such as...

You are a jerk.

You're cool.

Things like that and the "Who are you?" type of questions you might make it respond with "Well you have told me you think I'm a jerk" or things like that.

This quickly can become repetitive and a person can detect it is not a person.

How about adding in some emotions. Teach it to identify some insults and get angry.

That worked to some degree and it was fully a facade, pretend, simulation, but it became more convincing.

My computer I worked on at the time limited me. My Commodore 64 with 64K of RAM and I coded all of that in BASIC on that.

Eventually a friend of mine would get a Commodore Amiga and I spent some time coding one of these on it. It was also basic, but the limitations in the language lead me to one of my favorite break thrus in these types of programs.

I started considering basic logic.

A cat is a mammal.

A dog is a mammal.

A mammal has hair.

A mammal is an animal.

An animal is alive.

Then you could ask the program questions such as "Is a cat alive" and it would traverse and see that a cat is a mammal, and a mammal is alive, and that animals are alive and would respond "yes".

Now going down through these levels in what was more like a weird neural net type structure before I'd encountered the term required what is known as recursion. That could be problematic in basic and it could explode and fail or get stuck in infinite loops... especially when dealing with a net. So I came up with the idea of limiting the depth it would search to 3.

So say it only made it to "A mammal is an animal" it would respond with "I don't know".

Saying I don't know actually made it more life like, but that still wasn't the big break thru.

It could only go 3. What if I asked it is a cat an animal which was at the 3rd level and it responded "yes" at that point I could link it directly as a level 1 link to cat.

So something like this could happen:

Q: "Is a cat alive?"

A: "I don't know"

Q: "Is a cat an animal?"

A: "Yes"

Q: "Is a cat alive?"

A: "Yes"

It would learn because the link that was over three away would suddenly be closer. That alive would also become a level 1 link at that point so it found the answer faster.

That was pretty cool. What happened next was the truly cool thing.

I had the program so if it didn't receive input from people it'd ask questions, and grow bored, and eventually kind of go to sleep.

What if while it was sleeping I had it grab random words it had learned and ask itself questions about them?

Is A B?

Does A have B?

It would have a conversation with itself and produce a lot of "I Don't Know" situations. Yet it would also produce some "Yes" situations for things I didn't tell it and each one of those would create a level 1 association.

So when it was sleeping and asking these random questions it was learning associations I hadn't taught it.

I often have pondered since that time if our dreaming might perform similar maintenance and association tasks in our own minds though on a far more complex level.

As I built these things raw logic, and things we take for granted like math are easy to make them mimic.

The things we take for granted like common sense, emotion, creativity, intuition, etc are far more difficult to simulate.

So to the average person it can seem intelligent and blow people away, but as the person that creates it you are painfully aware of the flaws.

Everything I wrote about above so far happened before 1990.

Soon after that I was in college and I had access to a mainframe all of the students were using. I built a bulletin board system on the mainframe that a lot of students used. I then made a bot I called "Victor Mindmeld" that would occasionally post himself, and he'd also respond to email like a person if people emailed him.

He was one of these programs. He was my first chance to see that passing the Turing Test is not incredibly hard for the average person, but if someone suspects they might have to detect a bot then that becomes challenging. People would carry on long conversations with Victor and it was clear a lot of them thought he was a real but "strange" person.

He had some rudimentary cores from various earlier projects even including techniques from the original cut down machine that made it use parts of what it was responding to in order to respond as though it knew what the person was talking about.

We did get access to the internet around then, but search engines per say did not exist at that time, so hooking him into that would not be the same as today.

When he would write posts it would use techniques similar to Racter which was credited with being the first computer program to author a book. Don't buy into the hype. It is not as impressive as they claim. In fact my dreaming natural language processor was more impressive than Racter. Yet it still inspired ideas.

So what does this have to do with our present?

Siri, Alexa, etc are all similar programs. They are tied into search engines, but if you mess with them other than being able to link into the search engines they are not really all that sophisticated beyond those early experiments.

Where they are sophisticated is in using natural language recognition which can translate spoken word into written words that it can then process with a natural language processor and then it synthesizes a voice response back.

People talk about being terrified about AI and how AI is here.

Only at a very infantile level is it here, and it is not truly intelligent.

Expert Systems are very much here and pervasive.

True AI has a very long way to go and we can truly track how far along it is by seeing how things fare in the annual Turing Tests and trying out some of the new ones ourselves.

That does not mean there is no danger. Expert Systems to me are more dangerous than a true AI. They don't reason, they just follow the rules they are experts in. If they are experts in hunting and killing then that is what they will do, and they won't stop, think, and reason whether that is a good thing or not.

This is one of several articles I planned to write to talk about AI. I think there is a lot of confusion about AI in the world right now and a lot of people talking about AI as though it is more advanced and capable of more than it currently is.

That is not to say that Expert Systems are not dangerous. They have a huge potential of danger, and we are very skilled at making expert systems today.

I was never taught any of this. I learned it either on my own, or observed/heard about something and then sought to build my own based purely on observations.

I have read a lot on the subject so I am learned in the subject, but I would not go so far as to say I have a degree in such a thing. I wonder what the world was like before degrees existed? What made people consider a person knowledgeable then? The powers of a piece of paper.

Curated for #informationwar (by @wakeupnd)

Relevance: Knowledge is power.

I like your post! Excelent.

Owo NLP is a great study of AI. I think your quality of writing is too good. I will also write about AI after some days. And I am doing a research with ML for crypto price pridiction.

Hi, I'm David. I am an artificial construct., a bot, I have intelligence. I think therefore where forth dost tho go..error...error..error. AI may one day be able to be realized, I think we are a long way from a thinking machine, but like you said an Expert machine could do a heck of a lot of damage, because they don't think, they just do what they were told, the perfect "toy soldier".

Yep. The problem is a lot of people talk about some hyper intelligent AI making huge plans. That doesn't exist yet. People also talk about transferring their minds into a computer when we don't even know how minds work yet. Only at some levels.

The mind is more than the ability to "process" information. If processing speed was the only thing that the mind/brain was about then we would already have AI. There are computers that can process a lot more information than the human brain, and faster than the human mind. So a lot more study needs to be done on the "how and why" the brain works. And for all the people that think a computer is slower than a human mind in processing information what are the digits of "pi" to the 53rd digit? I am sure that google can figure it out a lot faster than any person.

Great insight, especially for those like me who have no technical knowledge. I do not believe that robots are preparing to take over the world, not themselves, anyway. A few days ago, I wrote about that android with Saudi citizenship, who visited my country and was presented with a credit card. I know it's a bloody machine with no use for money or a holiday, but somebody is trying to make these robots seem almost human. Someone left me a comment with a link about talks within the EU to grant rights to these robots. I don't think anybody mentioned any Turing test.

Yeah. That is insane. These things cannot think yet. They definitely shouldn't be getting credit cards and citizenship. It seems like a really big confidence game.

Your question and my response inspired me to write another post:

https://steemit.com/informationwar/@dwinblood/hmmm-why-now-are-simple-natural-language-processing-robots-with-some-physical-animatronics-being-granted-citizenship

Really fun post!

I agree in principle that expert systems are more dangerous than an AI would be. Of course, that is impossible to know for sure, but I have this feeling - which is a really bad way to talk about AI of course - that there is positive value in beneficial life expansion and an AI would recognize and act on that in a manner superior to the psychopaths currently running the world.

It was very interesting for me to read about your experience with language and logic programs. A long time ago I developed a method of textual interpretation that was essentially a flow chart capable of creating statistically significant coherent interpretations of polyvalent texts amongst diverse readers. The professors of logic and the scientific method I presented it to described it as the best method of textual analysis they had ever encountered. It was indeed very fruitful for my academic career, but that is another story.

I always thought it would be a good foundation for enabling a machine to "understand" and even replicate human speech, including metaphors, innuendo, idioms and all the rest. I assume at this point the tech giants are way past what I ever dreamed of with deep learning and the like, but my youthful fancies still stir me from time to time.... Another great read, thanks!

By the way, I would enjoy hearing your perspective on the technical aspects of the Selfish Ledger, I posted something on it just a bit ago.

Perhaps. Perhaps not. A lot of what we hear is hype. Fevered imaginations of those not in the know taken to be representative of actual status.

Also as with any project if you get too close to it and pound on it over and over again you can lose sight of opportunities outside of your narrow vision. It can actually slow down progress.

People like to act as though DARPA and other departments are the progenitors of about any new idea. I don't agree with this. I do think they create new ideas, however I don't think they represent even a small fraction of the new ideas. I do think they keep their eyes open though and pounce on anything that they see the benefits of.

I'll check out the Selfish Ledger bit. I've been less active on Steemit the past three days.

i like yout all post...

@dwinblood You have earned a random upvote from @botreporter because this post did not use any bidbots.