How to build trustworthy AI products

You can’t just ‘add AI’ to a project and expect it to work. It isn’t magic dust that can be sprinkled on a product.

The key to building systems that are integrated into people’s lives is trust. If you don’t have the right amount of trust, you open the system up to disuse and misuse.

This post (and the corresponding talk below) was originally created for product people getting started with data science, machine learning, and AI, as part of the Product School on May 30th. It includes a brief introduction to AI/ML, but there is also plenty that more experienced practitioners can learn about trust in AI.

Trust 101

Trust is important because it facilitates cooperative behavior. This is the cornerstone of how we create complex societies.

It boils down to a few key things:

- Contract — written or unwritten

- Focuses on expectations for the future

- Based on past performance, accountability, and transparency

- Builds slowly but can be lost quickly

What does trust have to do with machines?

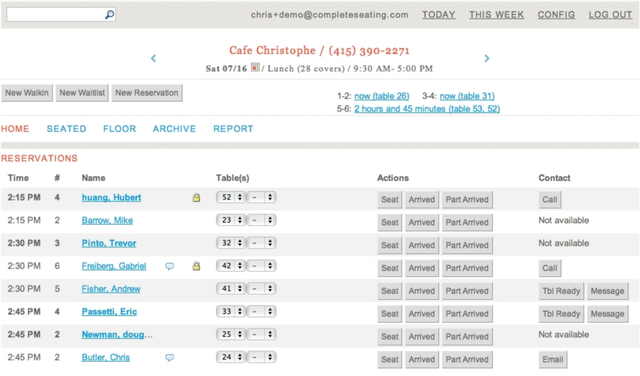

When I was working on a startup in the restaurant space called Complete Seating, we built a lot of features to help hosts manage their dining room floor. We included intelligent waitlist times and automatic table recommendations.

We called it analytics, business intelligence, prediction, and constraint programming back then, but it would have been called “AI restaurant management” if we were fundraising today.

Unfortunately, we didn’t gain the hosts’ trust because we lacked two of the three key aspects of trust: performance and transparency.

- Performance — it was a beta product and at first it didn’t live up to expectations. Once the service did start performing as it should, we had a big hill to climb to rebuild that trust.

- Accountability — when something went wrong we would always answer their call or show up on site to help out. They knew we were trying to help but it still didn’t fix the other two issues.

- Transparency — we didn’t give them good abstractions so they could understand what was happening behind the scenes. They wanted to apply their expertise, but they didn’t know what the system was and wasn’t already accounting for.

We initially attempted to address these issues by providing more context to the hosts.

Looking back, we should have started simpler by pointing out potential errors the host might make, rather than hiding the entire seating engine from them.

How do machines build trust with humans?

We need to keep the human at the center when we think about building trust with them. Failing to do so is one of the biggest dangers I see with AI in the coming years.

Using the same framework, we can think about machines building trust as follows:

- Performance — this isn’t as simple as traditional accuracy, precision, and recall. We need to ask which metrics actually reflect the human’s purpose.

- Accountability — there are many ethical and legal questions that need to be answered on what responsibility the designers of the systems need to take in their operation.

- Transparency — how do we build systems that are interpretable, provide adaptive action, give the feeling of control, allow intervention by humans, and collect feedback to improve over time?

Check out the talk for a deep dive into each of these key aspects.
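
To make the performance point concrete, here is a minimal sketch of why accuracy, precision, and recall alone can mislead. The `Confusion` class, the `human_cost` method, and the per-error costs are all hypothetical, chosen only for illustration; in practice the costs would have to come from talking to the people who use the system.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # automation flagged it, and it was real
    fp: int  # automation flagged it, but it was not real
    fn: int  # automation missed something real
    tn: int  # automation correctly stayed quiet

    def _total(self) -> int:
        return self.tp + self.fp + self.fn + self.tn

    def accuracy(self) -> float:
        total = self._total()
        return (self.tp + self.tn) / total if total else 0.0

    def precision(self) -> float:
        flagged = self.tp + self.fp
        return self.tp / flagged if flagged else 0.0

    def recall(self) -> float:
        real = self.tp + self.fn
        return self.tp / real if real else 0.0

    def human_cost(self, cost_fp: float, cost_fn: float) -> float:
        """Average cost per decision, given assumed costs for each error type."""
        total = self._total()
        return (self.fp * cost_fp + self.fn * cost_fn) / total if total else 0.0


# Two hypothetical systems with identical accuracy.
system_a = Confusion(tp=40, fp=10, fn=10, tn=40)
system_b = Confusion(tp=30, fp=0, fn=20, tn=50)

for name, c in [("A", system_a), ("B", system_b)]:
    print(name, c.accuracy(), c.precision(), c.recall(),
          c.human_cost(cost_fp=1.0, cost_fn=5.0))
```

Both systems score 0.8 on accuracy, but if a miss hurts the user five times more than a false alarm (an assumption), system B costs more per decision than system A (1.0 versus 0.6). Flip the costs and the ranking flips too, which is why the metric has to follow the human purpose.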

What is the right level of trust for machines?

In “Humans and Automation: Use, Misuse, Disuse, Abuse” (1997), Parasuraman and Riley discuss the various ways that people use automation based on trust:

- Use — “[Voluntary] activation or disengagement of automation by human operators.” This is when there is the ‘right’ amount of trust.

- Misuse — “[Over] reliance on automation, which can result in failures of monitoring or decision biases.” This is when the human trusts the system too much.

- Disuse — “[Neglect] or underutilization of automation…” will happen when the human doesn’t trust the automation enough.

- Abuse — “[Automation] of functions… without due regard for the consequences for human performance…” This happens when the operators (or those impacted) are not taken into account when designing automation. This is one of the biggest dangers I see with AI in the coming years.
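
One rough way to look for misuse and disuse in a running product is to log, for each decision, whether the automation was right and whether the human went along with it. The sketch below is an assumption on my part rather than anything from Parasuraman and Riley: the `Decision` record and the `reliance_rates` function are hypothetical names, and the two rates are only proxies for the categories above.

```python
from typing import Dict, Iterable, NamedTuple


class Decision(NamedTuple):
    automation_correct: bool  # was the automation's suggestion right?
    human_followed: bool      # did the human accept the suggestion?


def reliance_rates(log: Iterable[Decision]) -> Dict[str, float]:
    """Return proxy signals for misuse (over-reliance) and disuse (under-reliance)."""
    entries = list(log)
    wrong = [d for d in entries if not d.automation_correct]
    right = [d for d in entries if d.automation_correct]
    # Over-reliance: the human followed the automation even though it was wrong.
    over = sum(d.human_followed for d in wrong) / len(wrong) if wrong else 0.0
    # Under-reliance: the human overrode the automation even though it was right.
    under = sum(not d.human_followed for d in right) / len(right) if right else 0.0
    return {"over_reliance": over, "under_reliance": under}


# A small made-up log, for illustration only.
sample_log = [
    Decision(automation_correct=True, human_followed=True),
    Decision(automation_correct=True, human_followed=True),
    Decision(automation_correct=True, human_followed=True),
    Decision(automation_correct=True, human_followed=False),
    Decision(automation_correct=False, human_followed=True),
    Decision(automation_correct=False, human_followed=False),
]

print(reliance_rates(sample_log))
```

On this made-up log the human followed one of the two wrong suggestions (over-reliance of 0.5) and overrode one of the four correct ones (under-reliance of 0.25). What counts as too high is a product judgment, not something the code can decide.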

How can we learn about trust in machines?

We can learn what people expect and need in order to trust a system through prototyping and research.

When prototyping, you don’t need to actually build and train models. You just need to consider a few different situations and fake it:

- Correct operation or ‘happy path’

- Incorrect operation — false positives/negatives from the automation

- Both sides of the borderline of misuse/disuse to understand the ‘right’ trust levels

- Appropriate scenarios for feedback from human to machine

- State communication from machine to human for your abstractions
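
As an illustration of faking it, the sketch below stands in for a trained model during a prototype session. Everything in it is hypothetical: the `FakeWaitEstimator` name, the waitlist scenario, and the default error rate and error size are placeholders you would tune to the situations you want to test. Over- and under-estimates play the role of incorrect operation here.

```python
import random
from dataclasses import dataclass


@dataclass
class FakeWaitEstimator:
    """Stand-in for a real model: returns the known answer, sometimes made wrong on purpose."""
    error_rate: float = 0.2   # assumed share of answers that are deliberately wrong
    error_minutes: int = 20   # assumed size of each injected error
    seed: int = 0             # fixed seed so every test session sees the same errors

    def __post_init__(self) -> None:
        self._rng = random.Random(self.seed)

    def estimate(self, true_wait_minutes: int) -> dict:
        inject = self._rng.random() < self.error_rate
        shown = true_wait_minutes
        if inject:
            # Push the estimate too high or too low to simulate incorrect operation.
            direction = self._rng.choice([-1, 1])
            shown = max(0, true_wait_minutes + direction * self.error_minutes)
        return {"shown_minutes": shown, "injected_error": inject}


# Happy path: the fake model is always right.
happy_path = FakeWaitEstimator(error_rate=0.0)
# Incorrect operation: the fake model is always wrong.
always_wrong = FakeWaitEstimator(error_rate=1.0)

print(happy_path.estimate(30))
print(always_wrong.estimate(30))
```

Sweeping `error_rate` between those two extremes across sessions is one way to probe where people start ignoring the tool and where they stop checking it.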

I have also found that, when starting to build, a “Wizard of Oz” or “concierge” approach to MVPs for AI can help you focus on what you really need to learn.

In conclusion

Remember:

- AI is like any other technology: it has tradeoffs, and you should be aware of the nuances.

- Build for the right amount of trust: not too much and not too little.

- Trust is based on performance, accountability, and transparency to humans.

Check out the video for the full talk and more details for each of these points.