Artificial Neural Networks (ANNs) and How They Work

When the word "neural" is mentioned, the first thing that comes to mind is the brain. ANNs are computer programs inspired by biology and designed to simulate the way the human brain processes information. Put simply, ANNs learn by recognizing patterns and relationships in data (that is, from experience) rather than by being explicitly programmed.

Brief History of ANN

Artificial Neural Networks are not a new concept. The idea dates back to the 1940s, which saw the emergence of Hebbian learning, a hypothesis proposed by the Canadian psychologist D.O. Hebb that is now regarded as an early form of unsupervised learning. The 1960s saw the rise of the perceptron, the building block of today's general artificial neural network, the multilayer perceptron. Soon after perceptrons, the growth of ANNs stagnated, if it was not outright frowned upon, because scientists had neither the computing power that complex artificial neural networks require nor the data to feed them. Much of this problem has since been solved: our computers are much faster, and thanks to the Internet we now have access to vast amounts of data.

Neural Networks vs. Artificial Neural Networks

Let me begin by saying that there is a difference between neural networks and artificial neural networks. Although it is common to find the two terms used interchangeably, it shouldn't be so. The former refers to work in computational neurobiology (cognitive science), which uses mathematical tools and theories to study how information is processed by actual neurons and synapses in the nervous system, as opposed to artificial neural networks, the computer programs described above.

Before we dive deeper, I would love to introduce you to an interesting theory known as the computational theory of mind (CTM).

The Computational Theory of Mind (CTM)

"The mind is a Turing machine." Sound familiar? Maybe not, but if you have ever come across this slogan, it originates from the classical computational theory of mind (CCTM). CTM is simply a theory which proposes that the mind is a computational system, a thinking machine of some sort. It is a common mistake to think that CTM represents the mind as a computer; it doesn't, as that would suggest that the mind is programmable.

There have been many criticisms of and modifications to CTM, but one of the prominent ones is RTM (the Representational Theory of Mind), which holds that thinking takes place in a language of thought known as Mentalese. Fodor, the main advocate of RTM, argues that thinking is best explained by combining CTM and RTM, which implies that mental activity involves Turing-style computations over the language of thought.

CTM is a broad topic for another day, but I had to slip it in because Fodor (1975) proposes CCTM+RTM as the foundation for cognitive science. If the mind can be considered a thinking machine, the question becomes: can machines think? Are they capable of perception and a host of other mental activities based on experience?

The last five years have seen enormous progress in the field of artificial intelligence. Our computers are not only learning more but learning a lot faster, and data is abundant thanks to the Internet. Today we have self-driving cars, software that can create stories from pictures, programs that can profile a person based on their past behaviour, and much more. Things are beginning to fall into place for the advancement of artificial intelligence, and of artificial neural networks in particular.

How do ANNs Work?

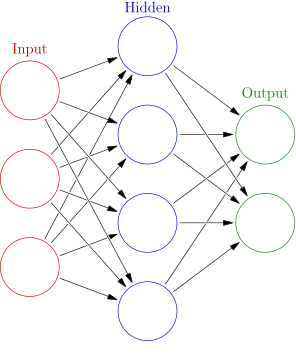

Inspired by the nervous system, ANNs are made up of neurons arranged in layers, with each neuron connected to neurons in the adjacent layers. The depth of a network is determined by its number of layers. In the brain, a neuron can receive as many as a hundred thousand signals from other neurons. When those neurons fire, they exert either an inhibitory or an excitatory effect on the neurons they are connected to, and if the combined input reaching a neuron adds up to a particular threshold voltage, it too will fire.
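The threshold behaviour described above can be sketched as a single artificial neuron. This is a minimal sketch, assuming a simple threshold unit in which positive weights stand in for excitatory connections and negative weights for inhibitory ones; the function name `threshold_neuron`, the weights and the threshold are hypothetical values chosen for illustration.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of the inputs reaches the threshold, else 0.

    Positive weights play the role of excitatory connections,
    negative weights the role of inhibitory ones.
    """
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Hypothetical neuron with three upstream neurons: two excitatory, one inhibitory.
weights = [0.6, 0.7, -0.4]
threshold = 1.0

# Only the two excitatory neurons fire: 0.6 + 0.7 = 1.3, above the threshold.
print(threshold_neuron([1, 1, 0], weights, threshold))
# The inhibitory neuron also fires: 0.6 + 0.7 - 0.4 = 0.9, now below the threshold.
print(threshold_neuron([1, 1, 1], weights, threshold))
```

The second call shows inhibition at work: the same excitatory input no longer pushes the neuron past its threshold once the inhibitory neighbour fires too.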

Just like in the brain, signals travel between layers, but rather than firing electric signals, ANNs pass numbers along weighted connections. The effect a neuron (or a layer of neurons) has on the next layer is determined by the weights of its connections: a large weight strengthens a signal, a small weight weakens it, and a negative weight has an inhibitory effect. The final layer combines these weighted inputs to produce an output. (N/B: layers are simply sets of neurons.)
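A rough sketch of how weighted signals flow from one layer to the next, assuming a small fully connected network with sigmoid activations (a common choice, not one the text prescribes). The names `dense_layer` and `sigmoid` and every weight value are hypothetical, picked by hand rather than learned.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """Fully connected layer: each output neuron takes a weighted sum of all inputs.

    weights[j][i] is the weight on the connection from input i to output neuron j.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical 3-input -> 2-hidden -> 1-output network with hand-picked weights.
hidden_w = [[0.5, -0.2, 0.1],
            [-0.3, 0.8, 0.4]]
hidden_b = [0.0, 0.1]
output_w = [[1.2, -0.7]]
output_b = [0.05]

x = [1.0, 0.5, -1.0]                      # input layer: the data we feed in
h = dense_layer(x, hidden_w, hidden_b)    # hidden layer activations
y = dense_layer(h, output_w, output_b)    # output layer combines the weighted hidden signals
print(h, y)
```

Changing any single weight changes how strongly that connection influences the next layer, and adjusting those weights is what training does.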

For instance, suppose we want an ANN to select photos that contain a dog. As we all know, there are many kinds of dogs, which come in different colours, shapes and sizes, and their pictures are rarely taken in the same lighting or from the same angle. To help the system recognize dogs, we collect thousands of sample photos of dog faces, which we have identified and labelled "dog", along with samples of objects that are not dogs, which we have labelled "not dog". These labelled images serve as our training photos.

We then feed these images to the ANN. As the data passes through the network, the connections between neurons are weighted according to how useful the different elements in the photos are for telling dogs apart from everything else. For instance, curved lines might end up weighted more heavily than straight ones. The final layer, the output layer, then combines the features picked up along the way, such as a black nose, whiskers and an oblong face, and sends out the result: dog. This method of training a network by feeding it examples that have already been labelled with the correct answer is known as supervised learning.
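To make the idea of learning from labelled examples concrete, here is a minimal sketch using the classic perceptron learning rule, a simpler technique than the backpropagation covered in the next paragraph. It assumes each photo has already been reduced to a few hypothetical yes/no features such as "whiskers" or "black nose"; a real image network would learn its own features from raw pixels, and the function names are illustrative.

```python
def predict(weights, bias, features):
    """Return 1 ("dog") if the weighted evidence is positive, else 0 ("not dog")."""
    total = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if total > 0 else 0


def train_perceptron(examples, epochs=10, lr=1.0):
    """Perceptron learning rule: nudge the weights only when a prediction is wrong."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)  # 0 if correct, +1 or -1 if wrong
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias


# Hypothetical hand-made features: [whiskers, black_nose, oblong_face, straight_edges]
# Labels: 1 = "dog", 0 = "not dog"
training_set = [
    ([1, 1, 1, 0], 1),
    ([1, 1, 0, 0], 1),
    ([0, 1, 1, 0], 1),
    ([0, 0, 0, 1], 0),
    ([0, 0, 1, 1], 0),
    ([1, 0, 0, 1], 0),
]

weights, bias = train_perceptron(training_set)
for features, label in training_set:
    print(features, "dog" if predict(weights, bias, features) else "not dog")
```

The labels are what make this supervised: every weight change is driven by the gap between the network's answer and the answer we supplied.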

If the result matches our label, good! If not (say the photo was actually of a bear but the network answered "dog"), the network measures the error, traces it back through its layers and adjusts the weights of its connections. It then picks another photo and repeats the process over and over again. As the network keeps doing this, its ability to recognize dogs steadily improves without it ever being told what makes a dog a dog. This process of tracing the error backwards through the network to work out how each weight should change is known as backpropagation.
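The error-driven loop above can be sketched as a tiny network trained with backpropagation. This is a minimal, illustrative sketch, assuming a 2-input, 3-hidden-neuron, 1-output network with sigmoid activations and squared error, trained on the toy XOR problem rather than on dog photos; the function `train_xor` and all hyperparameters (learning rate, epochs, seed) are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# XOR truth table as (inputs, target) pairs: a hypothetical toy task.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]


def train_xor(hidden=3, epochs=5000, lr=0.5, seed=0):
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]  # input -> hidden
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]                      # hidden -> output
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        epoch_loss = 0.0
        for x, target in DATA:
            # Forward pass: weighted sums squashed by the sigmoid
            h = [sigmoid(sum(w * xi for w, xi in zip(w1[j], x)) + b1[j]) for j in range(hidden)]
            y = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
            epoch_loss += 0.5 * (y - target) ** 2
            # Backward pass: error signal at the output neuron...
            delta_out = (y - target) * y * (1 - y)
            # ...traced back to each hidden neuron through its weight to the output
            delta_hidden = [delta_out * w2[j] * h[j] * (1 - h[j]) for j in range(hidden)]
            # Gradient-descent updates: move each weight against its share of the error
            for j in range(hidden):
                w2[j] -= lr * delta_out * h[j]
                b1[j] -= lr * delta_hidden[j]
                for i in range(2):
                    w1[j][i] -= lr * delta_hidden[j] * x[i]
            b2 -= lr * delta_out
        losses.append(epoch_loss)
    return losses, (w1, b1, w2, b2)


losses, params = train_xor()
print(f"loss before: {losses[0]:.4f}, after: {losses[-1]:.4f}")
```

Whether a given run reaches a near-perfect XOR solution depends on the random starting weights; comparing the loss before and after training shows the weights being adjusted to shrink the error.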

Having understood how ANNs work, the big question is: how do we know how many neurons a layer needs? There are three types of layers: the input layer, which receives the information or data we provide to the network; the hidden layer, which is where all the magic takes place; and the output layer, which gives us the results reached by the network. Originally, scientists believed that a single input layer, one hidden layer and an output layer were enough to give accurate output, and that if the network wasn't computing the correct values, more neurons could simply be added to the hidden layer. The problem with this approach was that such ANNs were vulnerable to local minima: training could settle on weightings that produced fewer errors than any nearby alternative yet were still far from the best possible weightings. In effect, we had created a rigid mapping from inputs to outputs, in which a particular input would always give a certain output, and the network couldn't handle inputs it had not seen before, which was neither what we desired nor what we needed.
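The local-minimum problem can be illustrated with a made-up error curve for a single weight `w`. This sketch assumes plain gradient descent on the hypothetical function `w**4 - 3*w**2 + w`, which has a shallow valley near w = 1.13 and a deeper one near w = -1.30; real networks have a great many weights, but the effect is the same in spirit.

```python
def loss(w):
    """A made-up, non-convex 'error surface' with two valleys."""
    return w**4 - 3 * w**2 + w


def grad(w):
    """Derivative of loss(w)."""
    return 4 * w**3 - 6 * w + 1


def gradient_descent(w, lr=0.01, steps=1000):
    """Repeatedly step downhill from the starting weight w."""
    for _ in range(steps):
        w -= lr * grad(w)
    return w


w_right = gradient_descent(2.0)    # ends in the shallower valley (a local minimum)
w_left = gradient_descent(-2.0)    # ends in the deeper valley (the global minimum)
print(round(w_right, 2), round(loss(w_right), 2))
print(round(w_left, 2), round(loss(w_left), 2))
```

Starting from w = 2, descent settles in the shallower valley and never finds the better solution on the other side of the hump, even though its error there is higher.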

This problem helped drive the development of deep learning, which uses networks with more than one hidden layer, often many layers with hundreds of neurons each. This creates a huge number of variables (weights) that must be adjusted during training.
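To see why extra layers mean many more variables, here is a small sketch that counts the weights and biases in a fully connected network. The layer sizes are hypothetical (784 inputs would correspond to a 28x28-pixel grayscale image), and `count_parameters` is an illustrative helper name.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    Each pair of adjacent layers (n_in -> n_out) contributes n_in * n_out
    weights plus n_out biases.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


shallow = [784, 32, 10]        # one hidden layer of 32 neurons
deep = [784, 128, 64, 10]      # two hidden layers of 128 and 64 neurons
print(count_parameters(shallow))
print(count_parameters(deep))
```

Widening the first hidden layer and adding a second one takes the count from 25,450 to 109,386 variables, every one of which backpropagation must adjust.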

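Because of deep learning, ANNs and the datasets used to train them have become so large that the training data is usually not processed in one go. Instead, it is split into batches: the network runs backpropagation and updates its weights after each batch, and an epoch (one complete pass through the whole training dataset) is finished once every batch has been processed.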
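The batch-and-epoch loop can be sketched as mini-batch gradient descent. To keep it short, this sketch fits a straight line (y = w * x + b) instead of a full network, on hypothetical noise-free data; the function name `minibatch_sgd` and the batch size, learning rate and epoch count are illustrative assumptions.

```python
import random


def minibatch_sgd(xs, ys, batch_size=4, epochs=200, lr=0.1, seed=0):
    """Fit y = w * x + b by mini-batch gradient descent on mean squared error.

    One epoch is one full pass over the training data, split into batches;
    the parameters are updated after every batch, not once per epoch.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    updates = 0
    for _ in range(epochs):
        rng.shuffle(indices)  # a fresh batch order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradients of the batch's mean squared error with respect to w and b
            errors = [(w * xs[i] + b) - ys[i] for i in batch]
            grad_w = sum(2 * e * xs[i] for e, i in zip(errors, batch)) / len(batch)
            grad_b = sum(2 * e for e in errors) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
            updates += 1
    return w, b, updates


xs = [i / 20 for i in range(20)]
ys = [2 * x + 1 for x in xs]       # hypothetical data generated from y = 2x + 1
w, b, updates = minibatch_sgd(xs, ys)
print(round(w, 3), round(b, 3), updates)
```

With 20 samples and a batch size of 4, each epoch performs 5 weight updates, so 200 epochs give 1,000 updates in total.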

Types of ANNs

Apart from the rise of deep learning, it's necessary to know that we now have a wide range of ANN architectures. In a conventional ANN, every neuron in a layer is connected to every neuron in the next layer, but studies have shown that connecting neurons according to certain patterns can improve the quality of results, depending on the scenario. This leads us to the different types of ANNs. I will be expatiating on two that I find important; this blog post by Kishan Maladkar gives great insights into six types of ANNs being used today.

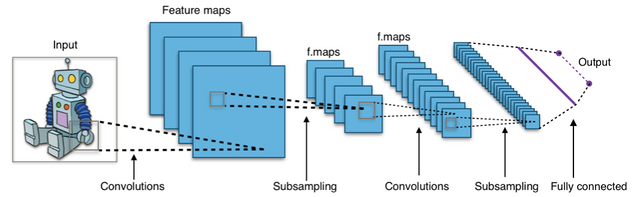

Convolutional Neural Networks (CNNs) are ANNs in which the connections between layers are not all-to-all: each neuron is connected only to a small, local patch of neurons in the previous layer. Convolutional networks are set up in such a way that the number of parameters that require optimizing is reduced. This is achieved by exploiting the symmetry in the connections: the same small set of weights (a filter) is reused at every position, creating many copies of a neuron that share their connection weights instead of each needing its own. Because they can recognize patterns formed by neighbouring pixels, CNNs are widely used when working with images.
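Weight sharing can be sketched with a plain-Python 2D convolution. This assumes a tiny hypothetical 5x5 image and a hand-made 3x3 edge filter; in a real CNN the filter values are learned during training, and libraries implement this far more efficiently. The helper name `conv2d` is illustrative.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNNs (technically cross-correlation,
    since the kernel is not flipped). The same kernel weights are reused at
    every position of the image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[a][c] * image[i + a][j + c] for a in range(kh) for c in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hypothetical 5x5 grayscale image: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-made 3x3 filter that responds to dark-to-bright vertical edges.
edge_filter = [[-1, 0, 1] for _ in range(3)]

feature_map = conv2d(image, edge_filter)
print(feature_map)

# Weight sharing: the conv layer needs only the 9 filter weights, whereas a fully
# connected layer from 25 input pixels to 9 outputs would need 25 * 9 = 225 weights.
conv_weights = 3 * 3
dense_weights = (5 * 5) * (3 * 3)
print(conv_weights, dense_weights)
```

The filter lights up wherever the window contains the dark-to-bright edge and stays at zero over the uniformly bright region, using the same nine weights everywhere.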

Reinforcement learning in itself is not really an ANN architecture but a general term used to describe how computers learn by trial and error to maximize a particular reward. However, the concept has been applied to building ANNs: I stumbled upon a YouTube video by SethBling in which he creates a reward-driven learning system that builds an ANN which plays Mario games on its own.
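As a minimal sketch of reward maximization, here is an epsilon-greedy agent for a multi-armed bandit, the simplest reinforcement-learning setting (no ANN involved). The payout probabilities, the function name `epsilon_greedy_bandit` and the exploration rate are all hypothetical.

```python
import random


def epsilon_greedy_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    """Learn by trial and error which action ('arm') pays off most often.

    With probability epsilon the agent explores a random arm; otherwise it
    exploits the arm with the highest estimated reward so far.
    """
    rng = random.Random(seed)
    n_arms = len(reward_probs)
    counts = [0] * n_arms
    values = [0.0] * n_arms  # running average reward per arm
    total_reward = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                          # explore
        else:
            arm = max(range(n_arms), key=lambda a: values[a])    # exploit
        reward = 1 if rng.random() < reward_probs[arm] else 0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]      # incremental mean
        total_reward += reward
    return counts, values, total_reward


# Hypothetical environment: three actions with hidden payout probabilities.
counts, values, total = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print(counts, [round(v, 2) for v in values], total)
```

Over time the agent chooses the best-paying action most often, balancing exploration (trying random actions) against exploitation (repeating what has paid off so far).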

Conclusion

Having tried to pass on a basic understanding of ANNs without leaving out the core concepts, I would say that you now have an insight into the state of work in machine learning. ANNs power a lot of our activities these days, such as captioning pictures, animal and face recognition, language translation, aircraft autopilots, target tracking, code sequence prediction, loan advisory, cancer cell analysis, routing systems and many others you can think of.

Although a lot of success has been recorded, ANNs still face challenges, including the time needed to train a network and massive computing power requirements. Lastly, for now ANNs are black boxes: we can't really see or explain the magic that goes on in the hidden layers.
