Let's Get It Twisted Backwards: The History Of The Computer

In our world today, we are living in what we call "The Computer Age". Most of our daily activities cannot be done without computerized devices, and the computer has become a major factor in defining how we spend our day. For example, I typed this article on a computer, I posted it with a computer, and you are using a computer to read it, unless you have developed a local machine that accesses the internet without any component of a computer, which I doubt.

This multipurpose and indispensable technology has become part of our everyday life and it would be very difficult to imagine life without it.

Let's Do A “Roll Back” On What A Computer Is: This Is A NoSQL Database.

A computer could be defined with deceptive simplicity as a device which carries out routine calculations automatically.

The deceptiveness in this definition comes from the fact that most people view calculation as a purely mathematical process. But calculation covers many activities that are not normally classified as mathematics. The act of walking towards something, for instance, needs many complex but subconscious calculations. We also carry out actions that involve no explicit mathematics but still follow calculated steps towards a goal. Today's computers have proven capable of solving very different problems, from balancing a checkbook to controlling a robot, and now there are even plans to implant computers into the human body.

The computer was invented by numerous people who put their time, effort and manpower into bringing it into the world. They had in mind that they were not just inventing an ordinary calculator or a mere number cruncher. They understood that they did not have to invent a new type of computer for every new calculation; instead, they could produce a single type of computer able to solve numerous problems, including problems that did not yet exist at the time.

In this article, we are going to look at the history of an amazing machine that has changed our lives and which I think we can't do without in our present age. Look around you. Just look around you. What do you see?

The computer: Early History

The computers we see today are the result of the need for proper counting and documentation. In the early days, our ancestors who lived in caves owned many animals and other possessions, and soon counting them and knowing the exact amount became a really big problem. The idea of the computer came from the need to solve this problem. Our ancestors started counting with stones, and counting with stones soon led to the invention of devices which are the precursors of the computers we know today. In summary… do you believe?

Here are some of the devices that led to the invention of the computers we know today.

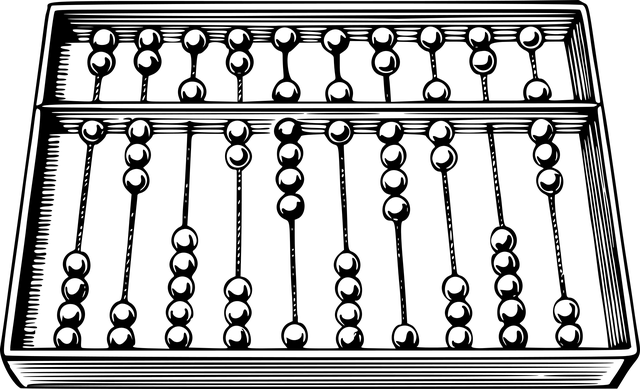

The Abacus

I can vividly remember being taught in primary school about the first computer and what it looked like. This was the abacus, the first known calculating device to be invented. Its exact origin is uncertain; the account I was taught credits the Egyptians with developing it around the 10th century and Chinese educationists with reconstructing it and giving it its final shape around the 12th century. The abacus is made up of a wooden frame fitted with rods, with beads sliding along those beautiful rods. I can imagine the look of fulfilment our ancestors wore when they accomplished this magnificent project.

The abacus is divided into two segments. The upper part is called "Heaven" while the lower part is called "Earth". Now, between heaven and earth, which one will you go for? I definitely go for the heavens. Life is good, but I want to change the planet. The abacus was used to carry out simple mathematical processes such as addition, subtraction, multiplication and division, and numbers were represented on it by placing the beads at their specific positions.
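To make "placing the beads at their specific positions" concrete, here is a minimal sketch of how a number could be laid out on the rods. It assumes the common layout where each rod holds one decimal digit, a heaven bead counts as 5 and each earth bead counts as 1; the function names are my own and only for illustration.

```python
def abacus_column(digit):
    """Return (heaven_beads, earth_beads) pushed toward the bar for one digit.

    Assumption: one heaven bead is worth 5 and each earth bead is worth 1.
    """
    if not 0 <= digit <= 9:
        raise ValueError("a rod holds a single decimal digit, 0 to 9")
    heaven, earth = divmod(digit, 5)
    return heaven, earth


def abacus_layout(number):
    """Lay out a non-negative whole number, one rod per decimal digit."""
    return [abacus_column(int(ch)) for ch in str(number)]


def abacus_value(layout):
    """Read the number back from the bead positions."""
    value = 0
    for heaven, earth in layout:
        value = value * 10 + heaven * 5 + earth
    return value


layout = abacus_layout(1947)
print(layout)                # [(0, 1), (1, 4), (0, 4), (1, 2)]
print(abacus_value(layout))  # 1947
```

Each pair reads as "heaven beads, earth beads", so the 9 in 1947 is one heaven bead plus four earth beads.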

Analogue calculators: Napier's rods - slide rule…What the heck is this?

As time went by, the need for a better and more convenient calculating device arose. In the process, John Napier of Scotland published his discovery of logarithms in 1614. He is also remembered for Napier's rods (or "bones"), a set of numbered rods for multiplying, which he described in 1617. Could it be Log to base 10?

As every one of us can attest, it is easier to add two 10-digit numbers than to multiply them together. Transforming a multiplication problem into an addition problem is exactly what logarithms enable. The system works because the logarithm of a product equals the sum of the logarithms of the numbers being multiplied: take the logarithm of each number separately, add them up, and you have the logarithm of the product.
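Here is a small sketch of that trick, multiplying two 10-digit numbers by adding their logarithms. Base 10 is my choice for the example (the rule holds in any base), and the function name is just for illustration. The answer is approximate because floating-point logarithms, like printed tables, carry a limited number of digits.

```python
import math


def multiply_with_logs(a, b):
    """Multiply two positive numbers by adding their base-10 logarithms."""
    log_of_product = math.log10(a) + math.log10(b)  # log(a*b) = log(a) + log(b)
    return 10 ** log_of_product                     # the "antilog" step


a, b = 1234567890, 9876543210
approx = multiply_with_logs(a, b)
exact = a * b

print(f"via logarithms: {approx:.6e}")
print(f"exact product:  {exact:.6e}")
print("agree to about 9 digits:", math.isclose(approx, exact, rel_tol=1e-9))
```

Someone using a table did the same thing by hand: look up two logarithms, add them, then look up which number has that sum as its logarithm.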

By the year 1624, there were logarithm tables with up to 14 significant digits for numbers from 1 to 20,000. A lot of scientists immediately adopted the tool for carrying out difficult astronomical calculations because it saved labor and time.

Napier's logarithms were one of the breakthroughs that made general mechanization possible. After that, it didn't take long before other analogue calculating devices, which represented digital values with analogue physical lengths, were invented. An example is the Gunter scale, which was invented by Edmund Gunter in 1620 and was used to perform navigational calculations.

Edmund Gunter was an English mathematician. He was the hero who coined the terms "cosine" and "cotangent", which are used in trigonometry today.

Also, in the year 1632, William Oughtred, an English mathematician, built the first ever slide rule, which was also based on Napier's ideas.

These analogue devices had some advantages and disadvantages when compared to digital devices like the abacus. What matters most is that these designs, and the consequences that followed from them, led to the evolution of the computer era.

Digital calculators:

In 1623, a German mathematician and astronomer called Wilhelm Schickard built the first digital calculator. He explained all about it in letters he wrote to his friend and fellow astronomer Johannes Kepler. He named it "the calculating clock", and modern engineers have been able to build replicas of this calculator using the details from those letters.

Wilhelm Schickard might not have been the original inventor of the calculator. A century before Schickard's time, Leonardo da Vinci had drawn sketches and plans detailed enough for modern engineers to build a calculating device from them.

Between 1642 and 1644, Blaise Pascal, a mathematician and philosopher from France, built the Pascaline, which was the first calculator to actually be used. He built it for his father, who was a tax collector, and the machine could only carry out addition and subtraction.

In 1673, Gottfried Wilhelm von Leibniz, a German mathematician and philosopher, built a calculating machine that was based on Pascal's ideas. He called it the "Step Reckoner". It was able to carry out multiplication by repeated addition.
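Multiplication by repeated addition is easy to sketch in a few lines. This shows only the arithmetic idea and makes no attempt to model the machine's gears; the function name is my own.

```python
def multiply_by_repeated_addition(multiplicand, multiplier):
    """Multiply by adding the multiplicand to a running total, multiplier times."""
    total = 0
    for _ in range(multiplier):  # one "turn of the crank" per addition
        total += multiplicand
    return total


print(multiply_by_repeated_addition(27, 6))  # 162
```

So 27 × 6 is simply 27 added to itself six times, which is work a machine can repeat without getting bored.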

The industrial revolution began in the 18th century, and with it came the need to carry out more operations efficiently. Charles Xavier Thomas de Colmar of France came to the rescue in 1820 and met this industrial challenge with the Arithmometer. It was the first calculating machine to be sold commercially. It was also based on Leibniz's technological ideas and could carry out addition, subtraction, multiplication and division. The Arithmometer was very popular and remained on sale for up to 90 years.

The Jacquard loom

After 1820, the Arithmometer was greatly appreciated and widely used because its potential for commercial calculations was well understood. Several other mechanical devices invented in the 19th century could also perform some functions automatically, but very few had anything that could be attributed to the computer. There was one exception, though: the Jacquard loom, which was invented in 1805 by a weaver from France called Joseph-Marie Jacquard.

The Jacquard loom fascinated the industrial world at that time. It was the first device to practically process information. It functioned by tugging threads of different colours into different patterns with the aid of an array of rods. A Jacquard loom operator could alter the weave pattern by inserting a punched card into the machine, which controlled the way the rods moved. The loom also had a device that could read the holes in the cards and slip in a new card from a deck of pre-punched cards, and this made the automation of different weaving patterns possible.

The most fascinating thing about the Jacquard loom was that it turned weaving, which was supposed to be a labour-intensive task, into something done easily with the help of these punched cards. The cards were punched for different design patterns, and once they were put in place, the weaving was as good as done.

The Jacquard loom taught important lessons to people who intended to mechanise calculation. Here are some of those lessons.

- The way a machine operates can be controlled.

- A punched card can be used to control and direct a machine.

- A device can be directed to carry out different operations by giving it instructions in a kind of language. That is, the device can be made programmable.
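The lessons above can be sketched with a toy "loom" that is controlled entirely by a deck of cards. The card format here is hypothetical: each card is a string where "O" stands for a hole and "." for solid card, and a hole lets the rod at that position act on its thread. A real loom is far more intricate, so treat this only as an illustration of one machine being steered by different decks.

```python
def read_card(card):
    """Return the positions of the rods that a card's holes let through."""
    return [i for i, spot in enumerate(card) if spot == "O"]


def weave(deck):
    """Produce one row of 'cloth' per card; '#' marks a thread that was raised."""
    rows = []
    for card in deck:  # the loom takes the next card from the deck
        raised = set(read_card(card))
        rows.append("".join("#" if i in raised else " " for i in range(len(card))))
    return rows


checker_deck = ["O.O.O.", ".O.O.O", "O.O.O."]
for row in weave(checker_deck):
    print(row)
```

Swapping in a different deck changes the pattern without touching `weave` at all, which is the sense in which the cards act as a program.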

The First Computer: (Difference And Analytical Engine)

After the invention of the Jacquard loom, all the ideas needed to invent the computer were now in place.

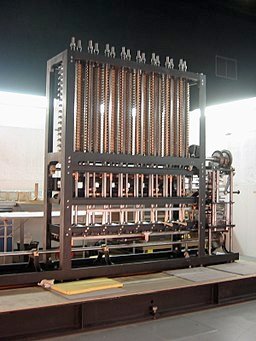

Charles Babbage was a mathematician and inventor from England. He was one of the founding members of Britain's Royal Astronomical Society. Babbage saw the need for a device that could carry out difficult astronomical calculations, and so he began work on the difference engine.

The difference engine was more or less a digital device, and it was more than the simple calculators which had already been produced at that time. It had the ability to carry out complex operations and solve complex problems with ease. Just like the computers we have today, the difference engine had a place where data could be stored before being processed, and it could also give its output on soft metal or a printing plate.

The difference engine, which was designed to be as big as a room, was never completed by Charles Babbage. In 1833 he ran out of funds to continue the project, and his machinist resigned, bringing production of the device to a standstill.

A working difference engine was eventually built from Babbage's designs and is exhibited at the Science Museum in London.

While Charles Babbage was developing the difference engine, he started looking for ways to improve it. By the time government funding for his inventions ran out in 1833, he had drafted something revolutionary: a real computing device which he called the analytical engine.

The analytical engine was a general purpose device. It was designed to have four parts: the reader, which was the input system; the mill, which processed data and is similar to the CPU in a modern computer; the store, where data were kept; and a printer, which was the output device.
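To see how those four parts fit together, here is a toy model. The card format and the three operations are hypothetical and invented for this sketch; they are not Babbage's actual design. The point is only to show the division of labour between reader, mill, store and printer.

```python
def run_engine(cards):
    """Run a deck of instruction cards through a toy four-part engine."""
    store = {}    # the store: named slots that hold numbers
    printed = []  # the printer: results collected in order

    def mill(op, left, right):
        """The mill: the only place where arithmetic happens."""
        if op == "add":
            return left + right
        if op == "sub":
            return left - right
        if op == "mul":
            return left * right
        raise ValueError(f"unknown operation: {op}")

    for card in cards:  # the reader: takes in one card at a time
        kind = card[0]
        if kind == "set":
            _, name, value = card
            store[name] = value
        elif kind in ("add", "sub", "mul"):
            _, target, left, right = card
            store[target] = mill(kind, store[left], store[right])
        elif kind == "print":
            _, name = card
            printed.append(store[name])
        else:
            raise ValueError(f"unreadable card: {card!r}")
    return printed


deck = [
    ("set", "a", 6),
    ("set", "b", 7),
    ("mul", "c", "a", "b"),
    ("print", "c"),
]
print(run_engine(deck))  # [42]
```

Feeding in a different deck makes the same engine solve a different problem, which is what "general purpose" means here.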

The analytical engine would have been like the computers we know today if only Babbage had not run into problems with implementation again. He stopped receiving funding from the government because they doubted his ability to produce the device, mostly because he had not completed the difference engine and this new device was taking a long time as well.

The analytical engine was the first device at that time which deserved to be called a real computer although it was not completed.

Generations Of Computer

Most times, the history of the computer is told in terms of the different generations of computers. Each of the five generations is characterised by a major improvement that changed the way the computer operates.

First Generation (1940-1956)

The first computers used vacuum tubes for circuitry and magnetic drums for memory. Computers of this generation were often gigantic and could take up an entire room. They cost a huge amount of money to operate and consumed a large amount of electricity, and the great amount of heat they generated was the main cause of their malfunctions.

Second Generation (1956-1963)

In second generation computers, transistors replaced vacuum tubes. Transistors were invented in 1947 but were not widely used in computers until 1956. They were much better than vacuum tubes because they were smaller, cheaper, faster and used less energy. All of this made second generation computers much better and more reliable than first generation computers.

Transistors still generated a great deal of heat, which often led to malfunction and damage. The computers also still used punched cards for input and gave out information through printouts.

There was also a massive improvement in the way instructions were given to computers. In the second generation, operators could give the computer its instructions in words, because programming evolved from binary machine language to assembly language.

Second generation computers were also the first to store their instructions in memory, as memory evolved from the magnetic drum to the more advanced magnetic core technology.

Third Generation (1964-1971)

Third generation computers were made remarkable by the invention of the integrated circuit (IC). The efficiency and speed of computers increased drastically because transistors were now miniaturized.

Punched cards and printouts were no longer the norm for third generation computers. Operators of these computers interacted with them through keyboards and monitors. The computers were also interfaced with operating systems that allowed them to carry out different operations at a time, with a central program monitoring the memory.

Third generation computers were the first to be accessible to many people in the public because they were cheaper and smaller than first and second generation computers.

Fourth Generation (1971-Present)

The fourth generation of computers began with the introduction of microprocessors. Thousands of integrated circuits were now built onto a single silicon chip. What filled an entire room in the first generation could now fit in the palm of the hand.

After the introduction of microprocessors, everything about a computer, from the input and output controls to the central processing unit, could be placed on a single small chip. These small computers had new features that allowed them to be linked together to form networks, and the internet grew out of this ability of fourth generation computers.

Fifth Generation (present-future)

Computers in this generation are based on artificial intelligence. These computers are still in their developmental stages, although some applications, like voice recognition, are already in use today.

Superconductors and parallel processing are the major tools that are helping to make the use of artificial intelligence a reality.

The main aim of developing fifth generation computers is to create devices that will be able to respond to human language, learn, and be capable of self-organization.

Summary

Today we are experiencing an explosion of societal innovation with the help of the computer. Without the foundation laid by our ancestors, we would not have been able to build a planet filled with so much computerized technology. Now we see this idea leading to a smart globe, where everything in the world will be able to communicate with everything else through IoT technology.

A question that always goes through my mind is this: did our ancestors invent more technology than we are inventing now? If so, why? And if not, why not?

Thank You For Your Time.

Hello @wisdomdavid

I could remember covering this topic while taking computer as a course during my undergraduate studies. This is for sure a great reminder. To have gone this deep into history to capture the accounts and chains of events that led to what we have today, must have taken a great deal of time, man! And you nailed it!

The shape of early computers by Charles Babbage cracks me up. How crude now but must have been considered a great innovation then. Our world is governed by dynamism, indeed!

@eurogee of @euronation and @steemstem communities

thanks boss! am really honored you stopped by. sorry for the late reply. Been busy lately

So if I had been born in the 1940s, I would be stuck with some gigantic first generation computers that can't even do shit? Thank God I'm born in this era.

Nice piece baba

But remember those that will be born in the next century from normal will call this present computer, innovations and science invention as rubbish. And they are also happy they aren't born now

Truism bro

of course but who can tell the future? it might be worse

Thank God for the opportunity. by now who knows the name you would be bearing!