Using AI to Measure Fan Enthusiasm at Music Festivals and Discotheques

Musicians and DJs need real-time audience feedback during performances. However, stage lighting often keeps us from seeing our audience (their movement, their facial expressions, and so on) during shows. As a result, we cannot gauge their enthusiasm or adjust our performance in response.

We at Team Ambience invented a means of measuring audience enthusiasm using a video camera and artificial intelligence (AI). Our solution reports mood metrics and summarizes crowd movement directly to performers’ phones. (Many musicians, myself included, perform with an iPhone attached to our mic stands.)

We developed our prototype from scratch while attending the Amazon- and Intel-sponsored “Hack Till Dawn - A Deep Learning Hackathon: Use Machine Learning to Revolutionize EDC Festivals” event last weekend. At this hacking competition, teams competed to win tickets to the Electric Daisy Carnival (EDC) by developing AI technologies designed to improve fan experiences at large music festivals. Our invention strengthens performers’ ability to “read” the audience in real time, so they can shape their shows around audience sentiment, which in turn improves the fan experience.

EDC will feature Team Ambience’s (our) solution, along with those of two additional winning teams, at three kiosks positioned throughout the festival this coming weekend. Fans will vote on their favorite idea—we certainly encourage you to vote for us!

Demonstration

Reading Audience Facial Expressions

The first video below shows us presenting different facial expressions to performers. The phone in the lower right reports our mood:

Zooming in on the phone application itself, note the change as the music changes:
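For readers curious how per-face readings might turn into a single mood report, here is a minimal sketch. It is an illustration only, not our prototype's code: it assumes some face-analysis step has already produced, for each detected face, a dictionary of emotion labels and confidence scores. The function name `summarize_crowd_mood` and the labels in the usage example are hypothetical.

```python
from collections import defaultdict


def summarize_crowd_mood(faces):
    """Average per-face emotion scores into one crowd-level summary.

    `faces` is a list of dicts, one per detected face, mapping a
    hypothetical emotion label to a confidence in [0, 1]. This input
    format is an assumption made for the sketch.
    """
    if not faces:
        # No faces detected: nothing to report.
        return {"dominant": None, "scores": {}}

    totals = defaultdict(float)
    for face in faces:
        for label, score in face.items():
            totals[label] += score

    # A label missing from a face counts as 0 for that face.
    scores = {label: total / len(faces) for label, total in totals.items()}
    dominant = max(scores, key=scores.get)
    return {"dominant": dominant, "scores": scores}


if __name__ == "__main__":
    sample = [
        {"happy": 0.8, "calm": 0.2},
        {"happy": 0.6, "calm": 0.4},
    ]
    print(summarize_crowd_mood(sample))
```

Averaging keeps one very expressive face from dominating the report. A real system would probably also weight faces by detection confidence.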

Reading Audience Movement

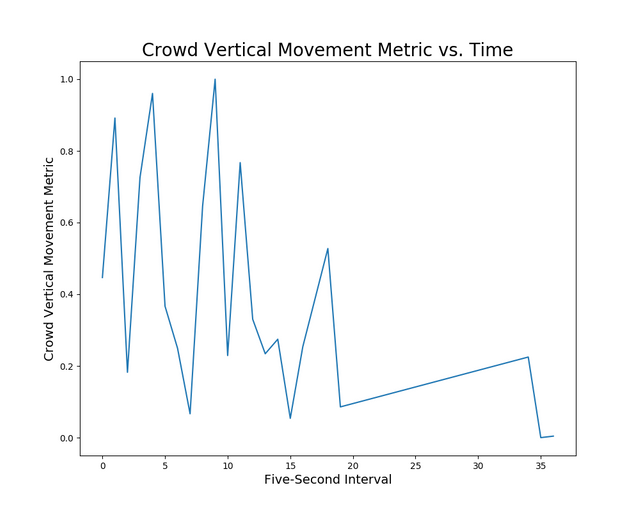

Is the audience jumping? Are they swaying? If so, how much? Consider the following video [1]:

From this we measure audience movement intensity and report it:
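One simple way to put a number on "how much" is frame differencing: compare consecutive grayscale frames and average the pixel change. The sketch below illustrates that idea under stated assumptions and is not necessarily the method our prototype uses. Frames are assumed to be equal-sized nested lists of 0 to 255 brightness values, and the names `movement_intensity` and `smooth` are hypothetical.

```python
def movement_intensity(prev_frame, curr_frame, max_value=255):
    """Mean absolute pixel change between two grayscale frames, scaled to [0, 1].

    Frames are assumed to be lists of rows of brightness values
    (an assumption for this sketch; a real pipeline would use image arrays).
    """
    if len(prev_frame) != len(curr_frame) or any(
        len(a) != len(b) for a, b in zip(prev_frame, curr_frame)
    ):
        raise ValueError("frames must have the same dimensions")

    total = 0
    count = 0
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        for p, c in zip(prev_row, curr_row):
            total += abs(c - p)
            count += 1
    return total / (count * max_value) if count else 0.0


def smooth(values, alpha=0.3):
    """Exponential moving average, so the reported number does not jitter."""
    smoothed = []
    current = None
    for value in values:
        current = value if current is None else alpha * value + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


if __name__ == "__main__":
    still = [[10, 10], [10, 10]]
    moved = [[10, 200], [10, 10]]
    raw = [movement_intensity(still, still), movement_intensity(still, moved)]
    print(raw, smooth(raw))
```

A score near 0 means a nearly static crowd, and higher scores mean more motion. Changes in stage lighting also alter pixel values, so a production version would need to compensate for them.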

Additional Applications

The audience metrics described hold value not only to performers, but also to venue owners, booking agencies, and artist managers. We provide a business dashboard for such stakeholders:

Furthermore, as a composer/performer, I envision creating an AI that composes and improvises with me on the fly using audience feedback.

Technical Details

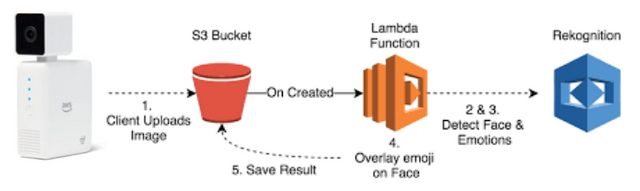

We used the Amazon machine learning stack, along with a DeepLens camera:

AWS DeepLens

We could not have achieved this without a complimentary AWS DeepLens camera (thanks, Amazon!). This wireless video camera contains a dedicated machine learning chip, along with preloaded algorithms. Furthermore, you can add your own algorithms. The whole solution is tightly integrated with the rest of the AWS machine learning stack. Click on the image below to go to the DeepLens page. (Disclosure: I am an affiliate marketer for Amazon.)

References

[1] I apologize that I do not know the source of this video.

Whoa! This is absolutely fascinating, though part of my paranoid brain thinks this brings us one step closer to fully automated dance floors, haha! Great work!
