15 Graphs You Need to See to Understand AI in 2021 - IEEE Spectrum

( April 15, 2021; IEEE Spectrum )

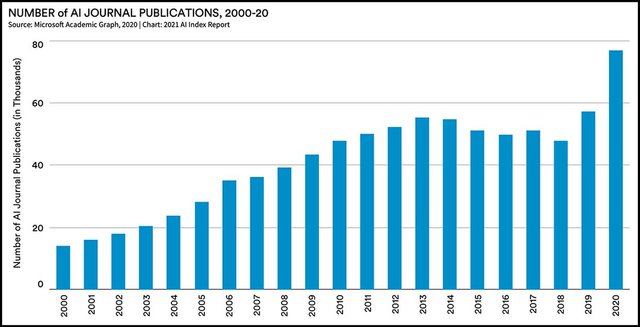

- We’re Living in an AI Summer

- China Takes Top Citation Honors

- Faster Training = Better AI

- AI Doesn’t Understand Coffee Drinking

- Language AI Is So Good, It Needs Harder Tests

...

15. The Diversity Problem, Part 2

To view all fifteen graphs and to read more about the above observations and nine others, click through to IEEE Spectrum: 15 Graphs You Need to See to Understand AI in 2021


Also, in case it helps anybody else understand what "Faster Training = Better AI" is getting at:

I initially thought it was implying that there were AI training frameworks that ended up with more accurate classification metrics after briefer periods of training. That's not it!

The point being made is actually that faster training reduces the risk of applying research efforts in any particular direction, which inherently streamlines the idea-to-impact pipeline for researchers. Presumably, the relatively lower cost provided by faster training mechanisms is also helpful.

Thanks for the clarification! From 6.2 minutes to 47 seconds in two years is a pretty remarkable improvement!

The most disturbing and amazing aspect of AI at the moment!

And presumably the technology will need to be used on itself to significant effect, to make up for us mere mortals' inability to detect AI-generated content.

Speaking of which, I think I have noticed a ring of accounts here on Steem that are spinning not-quite-gibberish with an artificial intelligence program like GPT-3, upvoting the posts to outrageous values with a bidbot that they control, and then (presumably) using the rewards to boost a competing blockchain. I have the day off from work, so I spent some time last night and this morning writing some code to try to identify other participants. It's still a work in progress.

The interesting part is this. I'm pretty sure this wasn't the programmer's intention, but it turns out that @trufflepig is already halfway decent at picking them out.
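For anyone curious what a search for such a vote ring might look like, here is a minimal sketch. It is not the code mentioned above and not how @trufflepig works. It assumes the vote history has already been exported as `(voter, author, post_id, weight)` tuples, which is a hypothetical format, and the function name and thresholds are likewise made up for illustration. The idea is simply to flag voters that supply most of the vote weight on several posts by each of two or more authors.

```python
from collections import defaultdict


def suspicious_rings(votes, min_share=0.8, min_posts=3):
    """Group authors whose posts are repeatedly dominated by one voter.

    votes: iterable of (voter, author, post_id, weight) tuples.
           This layout is an assumption, not a real Steem API format.
    Returns {voter: [authors]} for voters that dominate at least
    `min_posts` posts for each of two or more authors.
    """
    post_total = defaultdict(float)   # post_id -> total vote weight
    post_voter = defaultdict(float)   # (post_id, voter) -> weight from that voter
    post_author = {}                  # post_id -> author

    for voter, author, post_id, weight in votes:
        post_total[post_id] += weight
        post_voter[(post_id, voter)] += weight
        post_author[post_id] = author

    # Count posts per (author, voter) where the voter supplied most of the weight.
    dominated = defaultdict(int)
    for (post_id, voter), weight in post_voter.items():
        total = post_total[post_id]
        if total > 0 and weight / total >= min_share:
            dominated[(post_author[post_id], voter)] += 1

    # Collect the authors each voter dominates often enough.
    rings = defaultdict(set)
    for (author, voter), count in dominated.items():
        if count >= min_posts:
            rings[voter].add(author)

    # A single favored author is not a ring; require at least two.
    return {v: sorted(a) for v, a in rings.items() if len(a) >= 2}
```

A real check would need more signals than vote share (text similarity, posting cadence, where the rewards are sent), so treat the output as a list of leads to inspect by hand rather than a verdict.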

Interesting side project!

Also, very intriguing if that algorithm is biased toward AI-generated content.

By "picking them out", do you mean ID'ing in the daily truffle picks?

Yeah, I guess there is enough of a resemblance between the posts, and they have been drawing enough rewards for long enough, that trufflepig is able to recognize that posts like the ones in his list performed well in the past, so based on past performance the current post "should" earn higher rewards. Pretty cool, actually, that one AI found another (if my hunch is right...). He found pretty much all of the ones I found with my own code (so far, at least).

This is the sort of capability that has always led me to believe that Steem is underappreciated as a training ground for AI. It has large amounts of training data and, with reward payouts on posts, an exact answer to train against. Also, you can rerun the blockchain history as many times as you want for things like hindcasting and developing genetic algorithms.

I hadn't thought of the AI training use case. That's a nice idea.

I've also thought about how, with the interplay of incentives designed into the system, and social behavior that is likely informed by established structures using different incentives, it would be a pretty good case study for game theory.

I think it's the only negative impact of AI progress. 🤔

Thanks for sharing this post.