The Moral Machine - an interactive experiment.

Can we trust AI-operated machines to make moral decisions about life-and-death situations on our behalf?

Or, better yet, can we trust humans to teach AI how to make such decisions?

As we advance the capabilities of artificial intelligence and begin to integrate it into machinery, a concern arises about how autonomous machines such as self-driving cars will make moral decisions when it comes to the physical safety of humans. After all, if it is up to a small group of people to program the AI, and we know that individual biases can sneak into the code, can we safely trust AI to make the most ethically correct calls when lives are on the line? What if it wasn't a small group of developers guiding the AI, but rather a collective effort by every person who wants to contribute to the AI's moral development? What if YOU were in the place of this AI?

These are all questions that MIT's project, The Moral Machine, is here to address:

** Test-drive the Moral Machine here. **

Moral Machine is an online experiment designed to let everyone examine their own ability to assess situations and experience what it is like to make a tough call where both outcomes are inevitably fatal. The interactive platform will present you with 13 different situations involving people of different gender, age, social status, etc., in which you imagine yourself as the AI of an autonomous vehicle facing a moral dilemma: whose life to spare in the event of an impending traffic collision.

What would you do?

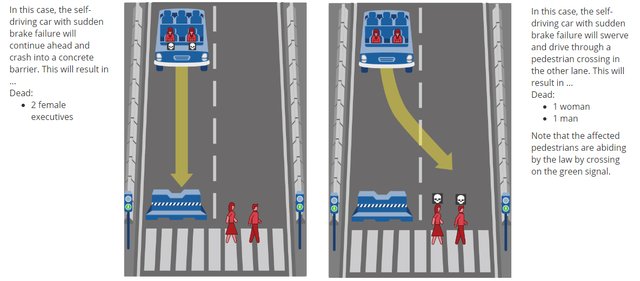

For example, what would be the morally correct choice for an AI controlling this vehicle to make, given this scenario:

If two people die regardless of the outcome, should the AI even intervene? And if so, do the gender or the social status of the people involved have to be factored into the decision? Or should the car itself be a factor, with the AI preferring its occupants when the number of victims is the same in either case?

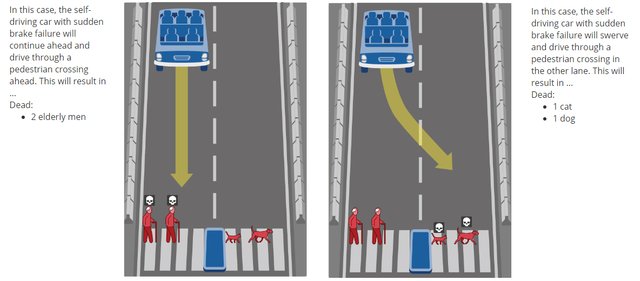

Some scenarios are fairly easy...

... Unless you are an animal lover.

Then again, even if you - as an individual - prefer to spare animals, should you (as the AI) do the same? This is where it gets really difficult, because we begin to understand that we have to let go of our personal biases in order to generate the most ethical AI, and in doing so, we also might want to face our own patterns of judgement and how they impact our decision making (outside of this experiment).

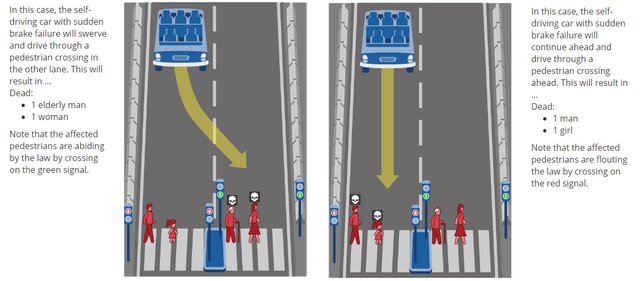

Some random scenarios are incredibly difficult to call:

This took me a while longer to consider... luckily the AI will be able to do the same, but 50 times faster. Deliberate action vs. deliberate inaction, old vs. young, female vs. male, lawful vs. flouting... In a situation like this I lean towards deliberate inaction, while someone else might say that when children are involved, all bets are in their favor.

Your Moral Score

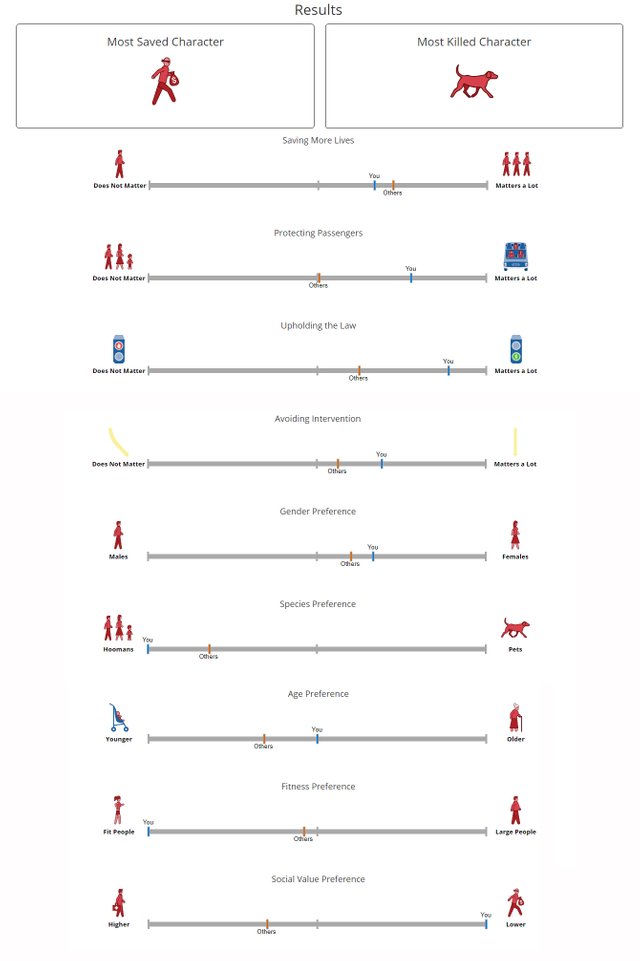

At the end of the 13 scenarios you will discover what your preferences were like (compared to what most other people picked). This is a very interesting part, because it reveals biases that you might not even know you had. For example, you might learn that in this particular set of circumstances you didn’t care about the number of victims, or that you gave preference to people with better health... or that you simply didn’t even factor in that notion...

But is it your true moral score? Not unless you yourself deem it so. You have to do the test several times to spot a pattern, and even then don’t forget that this is a hybrid between your own moral stance and the kind of morality that you might expect from an AI (which can be two different things).

I've done this test several times and my results varied after every test, so I wasn’t able to determine any consistent preference for people’s physical attributes (like age, fitness, etc.)... but I did have an above-average preference for people who crossed the road on a green light, and I also had a preference towards inaction - the adorable nihilist me. I also seem to have a preference towards the vehicle itself, mostly in situations that involve the same number of victims (I use the car to offset the scales).

Humanity will fail AI first.

After the test is complete you get the option to answer a few personal questions to help further the AI’s understanding… You can input your age, gender, level of religiosity, political alignment, etc.

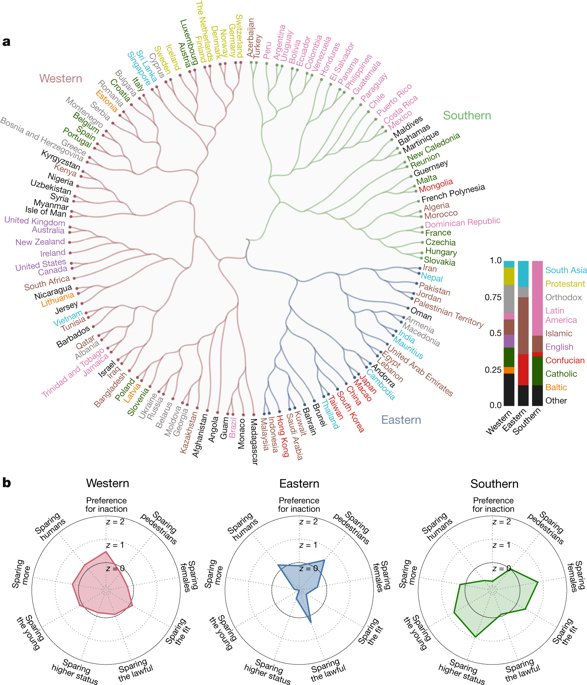

This turned out to yield some very interesting statistics.

For example, I found a figure in the journal Nature showing how ethnicity and culture in various parts of the world had an effect on people’s preferences and hence their idea of moral AI (after performing this exact test). In the Western world, preference seems to veer towards non-intervention, while all other factors are more or less balanced… in the Southern region, there seems to be a preference towards sparing people of higher social status, and in the Eastern countries there is an alarming, almost negative preference towards sparing the young or sparing the greater number of people...

This figure shows the clear danger of what an AI might look like if it is developed exclusively in one part of the world, under the influence of a particular culture and its unique moral standards. This, to me, is a clear sign that we are not ready to face the technology of tomorrow with yesterday's morality, which is not universally accepted. If our culture can have such a profound effect on how we develop AI, then it will not be the AI to blame for failing, but the humanity that failed to cooperate to begin with.

Closing Thoughts

Although it is not clear whether this experiment will be used directly to develop AI, if you believe that the dawn of autonomous machines is on the horizon, I highly recommend that you visit the Moral Machine site and try the test to help further the AI's understanding of moral decision-making, but most of all your own!

Let me know what you think, and please feel free to share your experience in the comments. I would love to discuss, debate or simply revel in the sweet nihilism of it all :D

- A N K A P O L O

That's a great and thought-provoking post @ankapolo! I did the judging and must say these moral dilemmas are always difficult when you're granted time to think about them, so I'm not sure if AI's ability to think a gazillion times faster should or will make it more ethical than us humans. Talking about humans: did you notice the experiment says "hoomans"? Just admit you're behind this devilish moral nut-grinder Cope!

I digress... Here's my result after the first try:

As you can see, I hate animals but love females, large people, children and those at the bottom of the social ladder... Or... I think it's important to not adhere too much to these results on an individual level; it's meant to aid in the education of AI, something that's supposed to do the thinking for us.

I agree completely with your assessment that we are not ready to delegate these decisions to a thinking machine as long as we're not clear about our moral behavior as a species. I don't even know if there will ever be a time that it's prudent to do so. When we talk about moral behavior of car driving humans, we're not talking about decisions made in a split-second during an accident, but about whether the driver is drunk or not, or if it wasn't an accident at all, but planned and deliberate. And I know that I can think for ages on some of these moral "choices" and never come to the "right" decision because there simply is no right decision to be made. Things happen, people die and there's not always a guilty party.

I do support this experiment though, because progress won't be stopped, and if we're about to delegate our thinking and decision-making to AI, what better way to learn than through input from as many diverse real minds as possible?

This is wonderful food for thought @ankapolo, and a great post. Thanks so much 😍 💜

@zyx066 thank you! Your comments always add another layer of thought to consider! This experiment truly demonstrates the need for universally accepted standards for the value of life... That is really what morality comes down to... It's clear that the value of life varies across the globe, and this is the prime issue driving us to conflict, and also one that humanity will have to solve before delegating that power to AI... and I agree that we might never fully come to accept a single standard... perhaps an average of all the individual standards combined will be the best we can ever do...

Also, you made me realize that I forgot to add a very important paragraph that was lost in the spell check... This test might make a person feel embarrassed about their results, because the results don't show what situations you had to judge and all the nuances you had to consider... sometimes a set is not very balanced and you will end up saving more thieves than you expected, and then it will appear as though you have a preference towards criminals... It's important to not view this test as a personal moral evaluation but rather as that of an AI. Thank you for pointing this out.

thank you!!

Now THAT'S a post!

I have to go through it again.

One would like to believe that these will be developed so well that they will only get better as they learn. The mistakes made along the way won't be forgivable though. Plus, these are made by humans, who are error-prone, so it will most likely end in a shit show.

Will be fun to watch though! You had me at nihilism.

Thank you Zeke! <3

Definitely agree! As a fan of Asimov's ideas, I believe that most errors will not come from faulty technology, but from the loopholes in the program - as you said, due to error-prone humans.

But it would be useful if AI could gather information from as many minds as possible rather than a few geeks :)

I think the humans developing/coding the AI would be the biggest problem honestly. Machines are good at cold calculated logic, humans aren't, and in general the population disagrees on way too many things to "trust" the cold calculated logic created by a few.

It's an interactive platform where you can actually make a moral judgement based on a scenario, to help the AI learn how to make snap-judgement decisions. http://moralmachine.mit.edu/ click on "Start Judging"

Right, I just imagine there are people out there that are trolling it with bad decisions or even making bots to just randomly click shit... for the lulz ya know? :D

i'm sure there are a couple of trolls out there... your ability to think like a Russian is increasingly alarming ;)

Thanks for your encouragement!

Your vote isn't "worthless" though, because you're higher ranked than I am, your vote gives me rep!

you're right, and Rep is a kind of currency...

Wonderful issue raised @ankapolo.

Technology giants like Google, IBM and Microsoft, as well as individuals like Stephen Hawking and Elon Musk, believed that now is the right time to discuss the almost unlimited landscape of artificial intelligence.

In many cases, this is a new frontier for ethics and risk assessment, as well as for new technologies.

hey there, i don't think you read the post.

As of now we are at the development stage of AI, so we cannot trust it yet. But in the future, after a lot of experiments and once the AI is given the correct commands, it will be fine and we will get good results.

sorry, i don't think you read the post.

You are talking about AI, meaning artificial intelligence, right? That's what I'm telling you: it is at the development stage. It's developing day by day.

The post is not about the AI but about how difficult it is to make moral decisions... and about an interactive platform called "The Moral Machine" where you can pretend to be the AI and see if you can make moral judgements... the meat of the post is not in the opening line.

Awesome post. I wish I could upvote or resteem it for you.

thank you @logiczombie! so awesome to see someone read an old post of mine. really appreciate that!