The ethics of future autonomous war machines

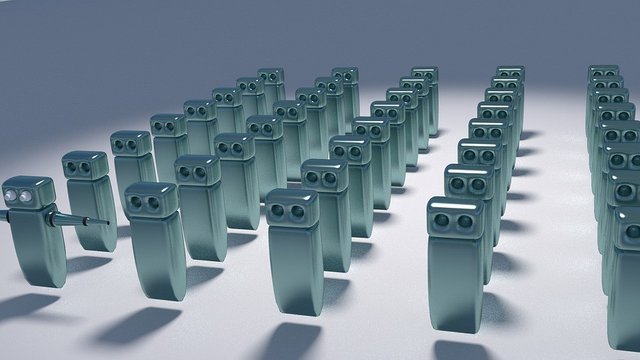

The most disturbing area in which machines can make autonomous decisions is the military. Computer-controlled machines capable of killing people, the so-called LARs (Lethal Autonomous Robots), are already feasible.

Although governments with the capacity to produce LARs have indicated that they do not contemplate using them in armed conflicts or other situations, there is no guarantee that this restraint will last.

Ethics for the machine

For that reason, in 2008 the US Navy commissioned the Ethics and Emerging Sciences Group at California Polytechnic State University to produce a report reviewing the ethical issues that LARs raise. The report also presents the two possible approaches to building ethical autonomous robots: top-down and bottom-up.

In the top-down approach, all the rules that govern the system's decisions are programmed in advance, and the robot simply obeys them "without change or flexibility." The biggest problem with this approach is that it is not easy to foresee every circumstance the robot will encounter.

In the bottom-up approach, the robot is programmed with a few rudimentary rules and is allowed to interact with the world. Using machine learning techniques, it develops its own moral code, adapting it to new situations as they arise.

In theory, the more dilemmas the system faces, the more refined its moral judgments become. But the bottom-up approach poses even thornier problems, as Nicholas Carr objects in his book The Glass Cage (published in Spanish as Atrapados):

In the first place, it is impracticable; we still have to invent machine learning algorithms subtle and solid enough for moral decision making. Second, there is no room for trial and error in life-or-death situations; the approach itself would be immoral. Finally, there is no guarantee that the morality developed by a computer would reflect or be in harmony with human morality.

Although all such devices still operate under human supervision, robots such as the SGR-A1, manufactured by Samsung Techwin, have algorithms capable of distinguishing between soldiers and unarmed civilians and of taking measures based on that identification: for example, firing rubber bullets. The robot can also illuminate intruders or request a password to verify that they are not disoriented friendly soldiers. South Korea already uses these semi-autonomous surveillance units along the border between the two Koreas.