From Entropy to Consciousness [Part 1]

This time in English, because of the terminology.

Don't be afraid of science. All of these are models, and models are by definition not the truth. However, it can be fun to understand the stuff all the "intelligent" people talk about. Even better, we can use it to understand (our) biology.

Entropy is...well

Entropy, first, means energy

Of course, this is the best-known context in which the concept of entropy is used. It describes the tendency of the universe (if it is a closed system...) towards thermodynamic equilibrium. Spray a gas into a room and observe the molecules: after a while they will be equally distributed. The molecules keep moving and colliding, but there is no longer any net change on the macroscopic level. For the universe as a whole this end state is called heat death or entropy death, usually pictured as a cold universe approaching absolute zero: the end of all change (except for quantum-mechanical, zero-point-energy induced particle motion). So a system has a macrostate, described by quantities such as its temperature, and it has microstates, which are the exact positions and movements (kinetic energies) of its molecules. The temperature emerges from the microstates: it reflects the average kinetic energy of the molecules, and many different microstates belong to the same macrostate. Equal distribution = no gradients = no net flow of energy = no more change.
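To see the gas-in-a-room picture in action, here is a minimal Python sketch of the Ehrenfest urn model, a classic toy model that I am swapping in for a real gas: N particles start in the left half of a box, and at every step one randomly chosen particle hops to the other half. The function name `ehrenfest` and its parameters are made up for this illustration.

```python
import random


def ehrenfest(n_particles: int, n_steps: int, seed: int = 0) -> list[float]:
    """Fraction of particles in the left half after each step.

    Toy model (Ehrenfest urn), not a real gas: all particles start on the
    left; at every step one particle, chosen uniformly at random, hops to
    the other half of the box.
    """
    rng = random.Random(seed)
    left = n_particles  # particles currently in the left half
    history = [left / n_particles]
    for _ in range(n_steps):
        # The chosen particle sits on the left with probability left / N.
        if rng.random() < left / n_particles:
            left -= 1
        else:
            left += 1
        history.append(left / n_particles)
    return history


if __name__ == "__main__":
    traj = ehrenfest(n_particles=1000, n_steps=20000)
    for step in (0, 100, 1000, 5000, 20000):
        print(f"step {step:>5}: fraction on the left = {traj[step]:.3f}")
```

Every single hop is reversible, yet the fraction on the left drifts to about 0.5 and then only jitters around it: the macrostate stops changing while the microstates keep changing.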

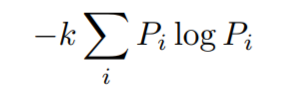

[You don't need to understand it right now: P_i is the probability that the system is in microstate i, and all P_i are evaluated for the same macrostate. In thermodynamics, k is the Boltzmann constant.]
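The image itself is not reproduced here. Judging from the bracket (probabilities of microstates, Boltzmann constant k), I assume it shows the Gibbs entropy S = -k Σ P_i ln P_i. Under that assumption, a minimal Python sketch looks like this; `gibbs_entropy` is a hypothetical helper name.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def gibbs_entropy(probs: list[float], k: float = K_B) -> float:
    """S = -k * sum(P_i * ln P_i) over the microstates i of one macrostate."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Microstates with P_i == 0 contribute nothing (p ln p -> 0 as p -> 0).
    return -k * sum(p * math.log(p) for p in probs if p > 0)


if __name__ == "__main__":
    W = 4  # number of microstates
    uniform = [1 / W] * W
    skewed = [0.7, 0.1, 0.1, 0.1]
    # With k = 1 the uniform case equals ln W (Boltzmann's S = k ln W).
    print(gibbs_entropy(uniform, k=1.0), math.log(W))
    print(gibbs_entropy(skewed, k=1.0))  # smaller: the system is more "ordered"
```

Setting k = 1 only changes the units; it makes it easy to see that spreading the probability evenly over all microstates gives the largest entropy.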

If this were the whole story, we would live in a one-shot universe: an early phase in which everything evolves and turbulence lets galaxies form, after which matter slowly disperses.

Fortunately, it is neither obvious nor trivial that the "universe" is a closed thermodynamic system (maybe it is open, maybe closed), and speaking about this kind of entropy for a system that is not in equilibrium is rather vague. All those vague theories lead us to Boltzmann's box, which raises many problems. So let's leave this popular definition of entropy for now.

Entropy also means (dis-)order or randomness

We, as observers of our world, have a sense of time only because there is change. Without change there is no time and, of course, no life. Entropy is claimed to be the tendency of the universe towards disorder and death. Really harsh, isn't it? We have our time because the universe only moves from a state of relatively high order to a state of relatively lower order, that is, from lower entropy to higher entropy. So entropy also means the absence of order, aka disorder.

Well, but why? Imagine you open a brand-new, well-ordered deck of cards and throw it on the ground: with high probability, it is now less ordered. With every throw it gets less and less ordered, or more and more disordered. This is because there are far more states of higher disorder than states of higher order. This is why time is a one-way street.
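The "far more disordered states" argument is plain counting, so it can be checked directly. This minimal Python sketch counts orderings of a 52-card deck and, as an easier-to-count stand-in, microstates of 100 coins; `share_near_half` is a hypothetical helper, and treating "about half heads" as the disordered macrostate is my illustrative choice.

```python
import math


def share_near_half(n_coins: int, width: int) -> float:
    """Fraction of all 2**n microstates whose number of heads lies within
    `width` of n/2, i.e. the 'mixed', disordered macrostates."""
    lo = max(0, n_coins // 2 - width)
    hi = min(n_coins, n_coins // 2 + width)
    favourable = sum(math.comb(n_coins, k) for k in range(lo, hi + 1))
    return favourable / 2 ** n_coins


if __name__ == "__main__":
    # A deck has 52! orderings; exactly one of them is the factory order.
    print(f"orderings of a 52-card deck: {math.factorial(52):.3e}")
    # 100 coins: the single 'all heads' microstate vs. the bulk around 50/50.
    print("P(all heads)    =", 1 / 2 ** 100)
    print("P(40..60 heads) =", share_near_half(100, 10))
```

A random throw almost always lands in the huge bulk of mixed states, simply because there are so many more of them.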

...is it? When you spill your 10th beer on the floor, how probable is it that the beer will reassemble itself and jump back into the glass? Not very probable, right? But it is not impossible! Within any finite stretch of time the probability of such an event is tiny, but never exactly zero, and with a sample size large enough, an event with nonzero probability will happen almost surely (strong law of large numbers). When a physical system returns arbitrarily close to its initial state in this way, it is called a Poincaré recurrence.
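Recurrence can be watched in the same Ehrenfest toy model of a box (a stochastic stand-in I chose for illustration; Poincaré's theorem itself is about deterministic dynamics). In this minimal sketch all particles start on the left, and we count the steps until they are all on the left again. For this model the mean return time is known to be 2**N (Kac's lemma). The function names are hypothetical.

```python
import random


def return_time(n_particles: int, rng: random.Random) -> int:
    """Steps until a box that starts with all particles on the left is
    again in the 'all on the left' state (Ehrenfest toy model)."""
    left, steps = n_particles, 0
    while True:
        # Move one uniformly chosen particle to the other half.
        if rng.random() < left / n_particles:
            left -= 1
        else:
            left += 1
        steps += 1
        if left == n_particles:
            return steps


def mean_return_time(n_particles: int, trials: int = 20000, seed: int = 1) -> float:
    """Average return time over many independent runs."""
    rng = random.Random(seed)
    return sum(return_time(n_particles, rng) for _ in range(trials)) / trials


if __name__ == "__main__":
    for n in (2, 4, 6, 8):
        simulated = mean_return_time(n, trials=5000)
        print(f"{n} particles: simulated {simulated:.1f}, 2**n = {2 ** n}")
```

The ordered state always comes back, but the waiting time doubles with every extra particle. For 100 particles it is already about 1.3e30 steps, which is why nobody has ever seen the beer jump back into the glass.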

Therefore, the second law of thermodynamics ("entropy in a closed system never decreases") is a statistical law rather than a strict physical law. It rests on the law of large numbers, a family of theorems in probability theory on which all of statistics is based.
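Why a statistical law looks like a strict one in everyday life can be shown with exact numbers. This minimal sketch assumes n molecules, each placed independently in the left or right half of a box, and computes the probability that the left half holds noticeably more or less than 50% (the 1% tolerance is an arbitrary illustrative choice). `prob_deviation` is a hypothetical helper.

```python
def prob_deviation(n: int, tol: float = 0.01) -> float:
    """Exact probability that, with n molecules placed independently and
    uniformly in two halves of a box, the count on the left differs from
    n/2 by more than tol * n."""
    inside = 0
    c = 1  # running binomial coefficient comb(n, k)
    for k in range(n + 1):
        if abs(k - n / 2) <= tol * n:
            inside += c
        # comb(n, k + 1) = comb(n, k) * (n - k) / (k + 1), exact in integers
        c = c * (n - k) // (k + 1)
    return 1 - inside / 2 ** n


if __name__ == "__main__":
    for n in (100, 1000, 10000):
        print(f"n = {n:>5}: P(deviation > 1%) = {prob_deviation(n):.4f}")
```

Deviations are never forbidden, they just become rarer as n grows. A real room holds on the order of 10^23 molecules, so a visible "un-mixing" is improbable beyond anything we will ever observe.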

Information-theoretical entropy

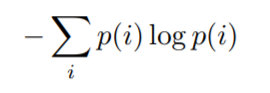

Then there are other contexts, such as information-theoretical entropy: the Shannon entropy. Imagine I toss a coin. The information the coin can hold is given by the distinguishable states it can be in. If it is a standard coin, then it is heads or tails, 1 or 0. This is one bit of information. Now imagine I don't show you the result. Then you have no information about the state of the system: its entropy is 1 bit.

[p(i) is the probability of receiving message i]
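Assuming the missing image shows Shannon's formula H = -Σ p(i) log2 p(i), it takes only a few lines of Python to compute; `shannon_entropy` is a hypothetical helper name.

```python
import math


def shannon_entropy(probs: list[float]) -> float:
    """H = -sum(p(i) * log2 p(i)) in bits; messages with p(i) == 0 are skipped."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


if __name__ == "__main__":
    print(shannon_entropy([0.5, 0.5]))   # fair coin: 1.0
    print(shannon_entropy([0.9, 0.1]))   # biased coin: about 0.47 bits, easier to guess
    print(shannon_entropy([1 / 6] * 6))  # fair die: log2(6), about 2.58 bits
```

The base-2 logarithm is what makes the unit "bits"; with the natural logarithm the same formula is, up to the constant k, the Gibbs entropy from the thermodynamics part.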

So entropy is also information, but a special kind: it is the information you don't have. Getting this information means reducing the entropy of the system (from your point of view). Now imagine six people observing the coin toss. What happens to the 1 bit of information/entropy now?

Does every person now have one bit? No, because the system holds only 1 bit. They all share the same bit. This shared information is called mutual information.
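The shared bit can be made concrete with the standard definition of mutual information, I(X;Y) = Σ p(x,y) log2 [p(x,y) / (p(x) p(y))], here between the coin X and one observer's note Y. This is a minimal sketch; the function name and the two example observers are hypothetical.

```python
import math


def mutual_information(joint: dict[tuple[str, str], float]) -> float:
    """I(X;Y) in bits from a joint distribution {(x, y): p(x, y)}."""
    px: dict[str, float] = {}
    py: dict[str, float] = {}
    for (x, y), p in joint.items():
        px[x] = px.get(x, 0.0) + p  # marginal of the coin
        py[y] = py.get(y, 0.0) + p  # marginal of the observer's note
    return sum(
        p * math.log2(p / (px[x] * py[y]))
        for (x, y), p in joint.items()
        if p > 0
    )


if __name__ == "__main__":
    # Observer who saw the toss: the note always equals the coin.
    saw_it = {("H", "H"): 0.5, ("T", "T"): 0.5}
    # Observer who looked away and guessed: the note is independent of the coin.
    guessed = {(c, g): 0.25 for c in "HT" for g in "HT"}
    print(mutual_information(saw_it))   # 1.0
    print(mutual_information(guessed))  # 0.0

    # Coin plus six observers' notes: only two joint outcomes are possible,
    # so the whole system still holds just 1 bit.
    everyone = {("H",) * 7: 0.5, ("T",) * 7: 0.5}
    print(-sum(p * math.log2(p) for p in everyone.values()))  # 1.0
```

Each watching observer shares the full bit with the coin, yet the coin and all six notes together still contain only that one bit.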

If entropy is information you don't have, then a hash or a password that is not yours has high entropy. The higher the entropy, the harder it is to guess. High-entropy passwords and hashes are better than low-entropy ones, right? In this context, entropy also has to do with disorder, in the sense of the absence of guessable patterns.

Ingeborg123 vs. gn2Io3gr1e ...OK? Both are built from (almost) the same characters, so their character frequencies are nearly the same. But in a password the order matters, so the second, more disordered string is preferable to the ordered and guessable string #1. Better, of course, would be a purely random string like: 8!!dP7&qM3 ?D@
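A minimal Python sketch of both ideas: the entropy of the character histogram is blind to order (a shuffled "Ingeborg123" scores exactly the same), while a truly random password's entropy is length × log2(pool size). The pool of 95 printable ASCII symbols and both helper names are assumptions for illustration; the formula only holds if every character is drawn independently and uniformly.

```python
import math
import random
from collections import Counter


def char_frequency_entropy(s: str) -> float:
    """Shannon entropy (bits per character) of the character histogram.
    It ignores the order of the characters completely."""
    counts = Counter(s)
    n = len(s)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def random_password_bits(length: int, pool_size: int) -> float:
    """Entropy of a password whose characters are drawn independently and
    uniformly from `pool_size` symbols: length * log2(pool_size)."""
    return length * math.log2(pool_size)


if __name__ == "__main__":
    ordered = "Ingeborg123"
    shuffled = "".join(random.Random(42).sample(ordered, len(ordered)))
    # Same characters, same histogram, same per-character entropy ...
    print(ordered, char_frequency_entropy(ordered))
    print(shuffled, char_frequency_entropy(shuffled))
    # ... so the pattern (name + digits) is what makes #1 weak.
    # "8!!dP7&qM3 ?D@" has 14 characters, counting the space.
    print(random_password_bits(14, 95))  # about 92 bits
```

Character statistics alone overrate human-made passwords; what an attacker exploits is the order (names, words, trailing digits), which is exactly the "disorder" the text talks about.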

There are even more versions of entropy when we go into quantum theory, but let's stick with the ones mentioned.

[Did you know that you can't see a hollow face as hollow (the hollow-face illusion)? This gives us a hint for a later article. Just keep it in mind. We will go from all this abstract shit to the biological/neurological foundations of perception, knowledge and good decisions in complex problems.]

Sources

T.M. Cover and J.A. Thomas 1991: Elements of Information Theory.

A. R. Brown and L. Susskind 2017: The Second Law of Quantum Complexity

Lathia et al. 2015: Heat Death (The Ultimate Fate of the Universe)

What a mesmerizing article, very good!

Surely a very complex topic, and the mere fact that, as you say, there are far more states of higher disorder than of higher order clearly shows that it is virtually impossible to REALLY grasp quantum mechanics. Of course we can delve into that topic to some extent, but by attempting to break all this information down into a solid body of understanding, we are actually trying to create some kind of higher order again: a temporary higher order we set up for ourselves to define ourselves as a species, because if we didn't, we would certainly become overwhelmed by the infinite possibilities of expression.

From a universal perspective, disorder actually IS the order, or vice versa. Miracles are a result of disorder because whenever there is order the probability of deviations decreases. Thus, it is quite good that disorder is the Universal order, because otherwise miracles would be non-existent. Then again, this pattern is the one we experience in this particular Universe, but in the billions of other Universes completely other patterns might apply.

For me, the easiest way to look at it without getting overwhelmed is to see that simply ALL exists with no boundaries, while ALL has the room to express itself somewhere within the infinite space-time continuum. Depending on where we are and how we choose to express ourselves, we can find explanations we resonate with and live and create in accordance with our discoveries, knowing that this is just one expression of the infinite and hence will always change.

Thank you, I enjoyed reading!

And I enjoyed reading your response!

Since brains are boundary-creating machines, yes, it's about not listening to your brain. Outside of our "Bayesian brains" there is only universal order.

And the point about miracles is really good. For us humans, disorder or entropy means that there is surprise and there are miracles in a system, and if you widen the boundaries, you can get an infinite amount of surprise.

As always, a very interesting article.

That entropy is information you don't have sounds very interesting, but it is kind of hard to understand.

Is unknown information a kind of energy?

Looking forward to your next articles!

OK, if entropy is information you don't have, then cryptography is about maximising the entropy of a message such as a string. Storing information needs energy (we could argue about this one), but apart from that, this term refers only to information (there is, of course, a connection between all those "entropies"). Shannon entropy is all about the bits a system holds, and it is observer-dependent. If you throw a coin (with a maximum entropy of 1 bit) and look at the outcome, you reduce the entropy to 0 bits: getting information means reducing Shannon entropy. For you, there is now 1 bit of information. For me, it can still be 1 bit of entropy... until you show me the result.
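The observer dependence can be sketched with a few fair coins: as long as all outcomes consistent with what an observer has seen stay equally likely, the remaining entropy is log2 of the number of such outcomes. Two observers of the same coins can therefore hold different entropies. This is a minimal Python sketch; the function name and the 3-coin setup are hypothetical.

```python
import math
from itertools import product


def remaining_entropy(n_coins: int, revealed: dict[int, str]) -> float:
    """Bits of uncertainty left about n fair coins after the observer has
    been shown the coins in `revealed` ({index: 'H' or 'T'}).
    All consistent outcomes stay equally likely, so H = log2(#outcomes)."""
    consistent = [
        outcome
        for outcome in product("HT", repeat=n_coins)
        if all(outcome[i] == side for i, side in revealed.items())
    ]
    return math.log2(len(consistent))


if __name__ == "__main__":
    print(remaining_entropy(3, {}))                        # 3.0: nothing seen
    print(remaining_entropy(3, {0: "H"}))                  # 2.0: one coin seen
    print(remaining_entropy(3, {0: "H", 1: "T", 2: "T"}))  # 0.0: fully known
```

The coins do not change at all; only what each observer knows about them does, and with it the Shannon entropy that observer assigns.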

If the result is a password, then I can guess it with at most two guesses: it's heads or tails. With 30 coins/bits it would still be easy for a computer, but because the order of the results matters, every additional coin doubles the number of possibilities. With a random 256-bit value (the size of a SHA-256 hash), it would take me far longer than millions of years to guess it, because there is so much information I don't have.
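The doubling argument is easy to put into numbers. This minimal sketch computes the worst-case time to try all 2**bits possibilities at a hypothetical rate of one trillion guesses per second (an assumed figure, not a measured one). It treats the SHA-256 case as a secret with a full 256 bits of entropy; the hash of a weak password is only as hard to guess as the password itself.

```python
def brute_force_years(bits: int, guesses_per_second: float) -> float:
    """Worst-case time in years to try all 2**bits possibilities."""
    seconds = 2 ** bits / guesses_per_second
    return seconds / (365.25 * 24 * 3600)


if __name__ == "__main__":
    RATE = 1e12  # hypothetical attacker: one trillion guesses per second
    for bits in (1, 30, 256):
        print(f"{bits:>3} bits: {2 ** bits:.3e} guesses, "
              f"{brute_force_years(bits, RATE):.3e} years")
```

At this assumed rate, 30 bits fall in about a millisecond, while 256 bits take on the order of 10^57 years, vastly longer than the age of the universe (about 1.4e10 years).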

Ah, great comment, I think I understand that part now :D

Really interesting topic

Wow, you really lost me on this one, but it's still interesting. What I understood is that entropy is a kind of information based on an energy level.

Thanks, yes, that's exactly the question... does information really depend on energy...

Wow, seems like the entropy topic had a huge impact on Steemit.

Don't burn your fingers.

Best

Chapper

As you may guess, it will move towards the bifurcation topic you opened. You can't start a topic like this without getting a reaction.

No worries,

Nice article, by the way.

I also heard that time is a function of entropy, which means the more entropy accrues, the "faster" time runs.

Have you found something comparable?

Regards

Chapper

Yes, right! The concept of the arrow of time deals with the asymmetry of time (from past to future). The entropy concept here is the one of disorder, so statistical thermodynamics rather than classical thermodynamics. As described in the article, it is simply because there are more states of higher disorder than ordered states. If you open a deck of Steem Monsters cards, it is perfectly ordered, but all other possible states are states of higher disorder. If you throw it down... it will most likely change (just by statistical chance). And this change in one direction is time (the same goes for evolution).

No change in microstates = no time.

But if you throw the cards long enough, the process will reverse at some time (or at some point in the sequence), and if you throw even longer, the deck will return to its starting order (just by the law of large numbers; this is a so-called Poincaré recurrence). That's why Boltzmann argued that the second law of thermodynamics is a statistical law rather than a strict physical law, and why the so-called fate of the universe is NOT necessarily heat death. If it were, all our theories would be wrong... so obviously it is different from what we think.

Yes, right! It means that there is only change through an increase of disorder by chance, which we perceive as time. And the increase of disorder by chance is entropy. A local, temporary reduction of entropy is life: because you are an open, dissipative system, you can reduce entropy locally for some time. That's what it means to be alive.

Best regards, Lauch

Steemmonsters is a severe entropy driver! At least for me 😂

Because of this I have to disagree: My fire splinters will force the heat death of the universe one day. 😉

Regards

Chapper
