Google Works with the Military Again: Where Are the Boundaries of AI Ethics? Will Machines Come to Control Humans?

The story begins in early March of this year, when it was revealed that Google had reached an agreement with the US Department of Defense (the Pentagon) to help it develop an artificial intelligence system for drones, under the codename Project Maven.

As soon as the news broke, the company's employees expressed strong dissatisfaction with the project. Some believed that Google was opening up its resources to help the Pentagon build drone surveillance technology. Others questioned whether Google's use of machine learning met ethical standards. They feared that the Pentagon would use the technology in lethal weapons, causing harm that technology optimists never intended.

Subsequently, more than 3,100 Google employees signed a joint petition to CEO Sundar Pichai in protest.

The controversy escalated further at the end of April. Some media outlets found that Google had removed three mentions of its long-standing motto "Don't be evil" from the beginning of the company's code of conduct. One sentence at the end of the code has not yet been deleted: "Remember, don't be evil. If you see something that you think is not correct, speak out!"

At the regular weekly "weather forecast" meeting on Friday, Google Cloud CEO Diane Greene announced that Google would end its Project Maven cooperation with the U.S. Department of Defense once the contract expires.

This was undoubtedly a major event. News of the "victory" spread wildly inside Google. As articles rushed out, the matter seemed to have ended, at least for the time being, with Google "compromising with its employees and declining to renew its contract with the Department of Defense."

But today, Google CEO Sundar Pichai published an article titled "AI at Google: our principles", which lists seven guiding principles and makes clear that Google will not end its cooperation with the US military. Google's reversal is awkward. Although the article spells out "AI applications we will not pursue," technology doing evil is still, at bottom, a problem of human evil, and AI ethics is once again prompting deep reflection.

Where are the boundaries of AI ethics?

If Google has had a rough ride recently, Amazon's Alexa has not had it easy either.

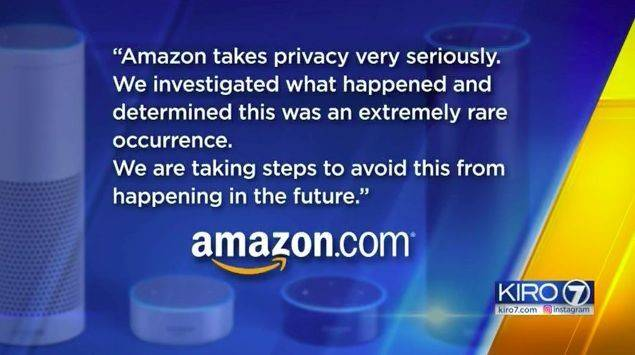

Amazon's Echo device was accused of recording a private conversation without permission and sending the audio to a random person in the user's contact list. This follows the earlier creepy incident in which Alexa was reported to be "laughing at humans."

These are far from isolated cases. As early as 2016, a chatbot named Tay, styled as a 19-year-old girl, began chatting on Twitter. This Microsoft-developed artificial intelligence used natural language learning technology: by collecting and processing data from its interactions with users, it could imitate human conversation and chat with jokes, memes, and emoticons like a person. However, less than a day after going online, Tay had been "trained" into an extremist who raved about ethnic cleansing and spewed crude abuse. Microsoft had to take it down on the grounds of a "system upgrade."

The more one thinks about this, the more unsettling it becomes. According to Ke Ming, an analyst at aixdlun, AI ethics will draw more and more attention as the harms of AI become more prominent. Where exactly are the boundaries of AI ethics? Several issues should be clarified first.

Can a robot become a civil subject?

With the rapid development of artificial intelligence technology, robots are becoming more and more intelligent. The gap between machines and humans is gradually narrowing, and the robots of the future will have biological brains with a number of neurons that may even rival that of the human brain. U.S. futurists even predict that by the middle of this century, non-biological intelligence will be a billion times more powerful than human intelligence today.

Citizenship no longer seems to be a problem for robots. In October last year, Sophia became the world's first robot to be granted citizenship, which meant that a human creation now held an identity equal to that of human beings, along with the rights, duties, and social status that come with it.

Whether a robot qualifies as a civil subject in law remains a dividing line in AI ethics. In recent years, philosophers, scientists, and lawmakers from the United States, Britain, and other countries have all engaged in heated debate. In 2016, the European Parliament's Committee on Legal Affairs submitted a motion to the European Commission requesting that the most advanced automated robots be given the status of "electronic persons". Besides granting them "specific rights and obligations", it also recommended that intelligent automated robots be registered for the purposes of taxes, contributions, and pension funds. If the motion is passed, it will undoubtedly shake up the traditional system of civil subjects.

Strictly speaking, a robot is not a living natural person, and it also differs from a legal person, which has its own independent will and exists as a collection of natural persons. It is indeed premature to try to hold the AI itself liable for a robot's misconduct.

Algorithmic discrimination is unfair

One common charge against artificial intelligence's errors of judgment is that it often "discriminates." Google, which uses the most advanced image recognition technology, was once accused of "racial discrimination" because its software labeled black people as "gorillas", and because a search for "unprofessional hairstyles" returned mostly images of black people's braids. Latanya Sweeney, a professor of data privacy at Harvard University, found that searching Google for names that sound characteristically black is likely to bring up ads related to criminal records, a result served by Google's smart advertising tool AdSense.

The danger goes beyond being "seen differently" in itself; after all, labeling a photo of a black person as a gorilla is merely offensive. AI decision-making is moving into more fields that actually bear on the fate of individuals, with real effects on employment, welfare, and personal credit. We can hardly turn a blind eye to "unfairness" in these areas.

Similarly, as AI moves into recruitment, finance, intelligent search, and other fields, we seem able to do little about the "algorithm machines" we have trained. In a modern society hungry for talent, whether an algorithm can help a company pick the one candidate in a thousand remains to be seen.

So where does the discrimination come from? Is it the ulterior motives of the people labeling the data, bias in the data fitting, or a bug in the program design? Can results computed by a machine serve as justification for discrimination, inequality, and cruelty? These questions deserve discussion.

Data Protection is the Bottom Line of AI Ethics

Cyberspace is a virtual existence that is nonetheless real, an independent world without physical space. Here, humans have achieved a "digital existence" separated from the body and have acquired a "digital personality." The so-called digital personality is "an individual's image in cyberspace, sketched through the collection and processing of personal information", that is, a personality established through digital information.

Supported by the Internet and big data, AI holds vast amounts of data on user habits. If the "accumulation of historical data" is the basis of machine evil, then the drive of capital is the deeper cause.

In the Facebook data leak, a company called Cambridge Analytica used artificial intelligence technology to target paid political advertisements at the psychological profile of each potential voter; which advertisement a person saw depended on his or her political leanings, emotional traits, and degree of vulnerability. Much fake news could spread quickly, gain exposure among particular groups, and subtly shape people's value judgments. Christopher Wylie, who led the technical work, recently revealed to the media the source of this AI technology's "food": in the name of academic research, the data of more than 50 million users was deliberately harvested.

Taking a step back, even without data leaks, the so-called "smart mining" of user data can easily skirt the edge of being "compliant" yet "unfair." As for the boundaries of AI ethics, information security has become the most basic bottom line for every "information person" in the Internet age.

Reflection

In a video about AI ethics that recently went viral, the artist Alexander Reben took no action himself but simply gave an order through a voice assistant: "OK Google, shoot."

In less than a second, Google Assistant pulled the trigger of a pistol and knocked down a red apple. Immediately afterward, a buzzer let out a harsh buzz.

The buzzing rang in both ears.

Who shot the apple? Was it the AI or the human?

In this video, Reben tells the AI to shoot. Engadget said in its report that if AI is smart enough to anticipate our needs, perhaps someday it will take the initiative to get rid of those who make us unhappy. Reben said that discussing such a device is more important than whether such a device exists.

Artificial intelligence is not a predictable, perfectly rational machine. Its ethical flaws stem from algorithms and from the goals and judgments of people. At least for now, however, the machine is still a reflection of the real human world, not a guide to or pioneer of the world as it "ought to be."

Clearly, as long as we hold the bottom line of AI ethics, humanity will not arrive at the day of "machine tyranny."

Google's "seven guidelines"

Be socially beneficial

Avoid creating or reinforcing unfair bias

Be built and tested for safety

Be accountable to people

Incorporate privacy design principles

Uphold high standards of scientific excellence

Be made available for uses that accord with these principles